Despite rapid progress in large vision-language models (LVLMs), existing video caption benchmarks remain limited in their ability to evaluate how well model descriptions align with human understanding. Most rely on a single annotation per video and lexical similarity metrics, so they fail to capture the variability of human perception and the cognitive importance of events. These limitations hinder accurate diagnosis of whether models produce coherent, complete, and human-aligned descriptions. To address this, we introduce FIOVA (Five-In-One Video Annotations), a human-centric benchmark for evaluating LVLM video captioning. It comprises 3,002 real-world videos (average duration 33.6 s), each annotated independently by five annotators. This design enables modeling of semantic diversity and inter-subjective agreement, offering a richer foundation for measuring human–machine alignment. We further propose FIOVA-DQ, an event-level evaluation metric that incorporates cognitive weights derived from annotator consensus, providing a fine-grained assessment of event relevance and semantic coverage. Leveraging FIOVA, we comprehensively evaluate nine representative LVLMs and introduce a complexity-aware analysis framework based on the inter-annotator coefficient of variation (CV). This analysis reveals consistency gaps across difficulty levels and identifies structural issues such as event under-description and template convergence. Our results highlight FIOVA’s diagnostic value for understanding LVLM behavior under varying complexity, setting a new standard for cognitively aligned evaluation in long-video captioning. The benchmark, annotations, metric, and model outputs are publicly released to support future evaluation-driven research in video understanding.

(1) Human-centric dataset with multi-annotator diversity: We introduce FIOVA, a benchmark comprising 3,002 real-world videos (average 33.6 seconds) covering 38 themes with complex spatiotemporal dynamics. Each video is annotated by five independent annotators, capturing diverse perspectives and establishing a cognitively grounded baseline for human-level video description. This design enables modeling of semantic variability and inter-subjective agreement, offering an authentic testbed for evaluating long-video comprehension.

(2) Comprehensive evaluation of nine representative LVLMs: We evaluate nine leading LVLMs: six baseline models on the full dataset, plus three frontier models (GPT-4o, InternVL-2.5, and Qwen2.5-VL) that join them on the high-complexity subset (FIOVAhard). Our tri-layer evaluation framework integrates traditional lexical metrics, event-level semantic alignment (AutoDQ), and cognitively weighted evaluation (FIOVA-DQ), revealing trade-offs between precision and recall as well as consistent model weaknesses under narrative ambiguity.

(3) Complexity-aware human–machine comparison with cognitive alignment: We propose a novel analysis framework based on the inter-annotator coefficient of variation (CV) to categorize videos by difficulty, enabling group-level diagnosis of model robustness and behavior patterns. Our findings show that while humans increase descriptive diversity under complexity, LVLMs often converge to rigid, template-like outputs. FIOVA-DQ captures this divergence by emphasizing event importance, and it aligns more closely with human judgment.

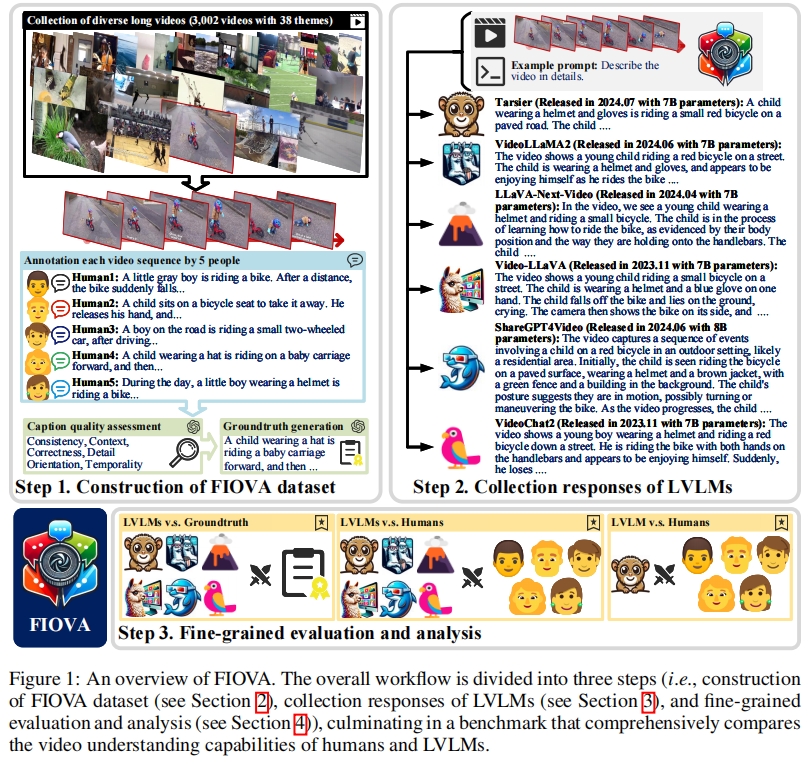

We introduce FIOVA (Five-In-One Video Annotations), a benchmark specifically constructed to systematically evaluate the semantic and cognitive alignment of large vision-language models (LVLMs) in long-video comprehension tasks. The dataset comprises 3,002 real-world videos (average duration: 33.6 seconds), covering 38 thematic categories. Each video was independently annotated by five human annotators based solely on the visual content, excluding audio or subtitles. This multi-annotator design supports modeling of semantic diversity and inter-subjective agreement, providing a solid foundation for human-aligned evaluation.

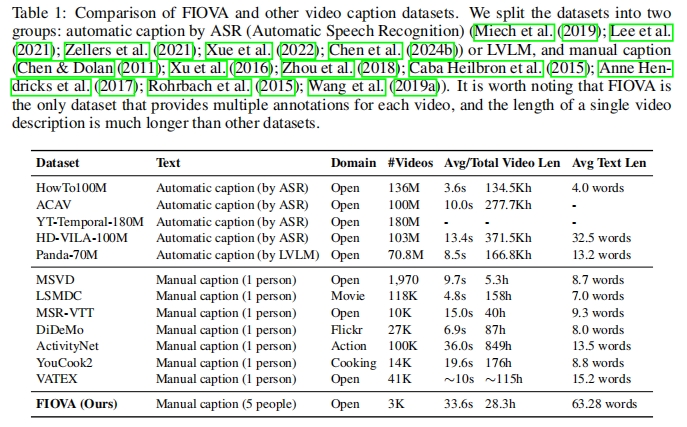

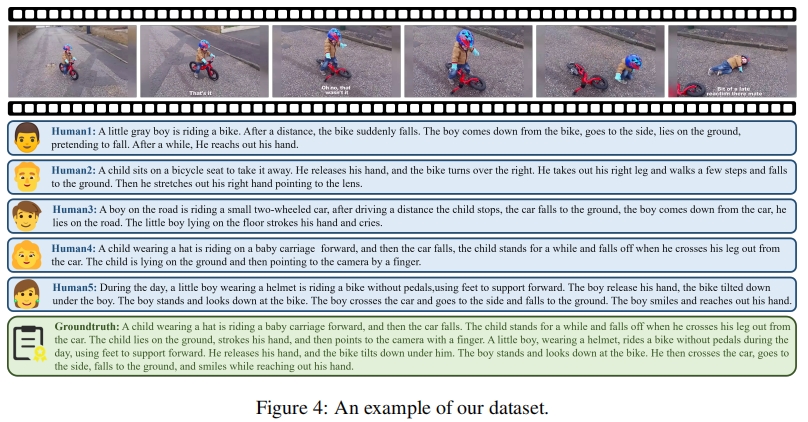

Compared to existing datasets, FIOVA offers substantial advantages in annotation richness and detail: each video is described using paragraph-level captions averaging 63.3 words, which is 4–15× longer than captions in mainstream benchmarks (see Table 1). To construct a unified reference, we fuse the five independent annotations into a single groundtruth caption using a GPT-based synthesis process. This groundtruth preserves key events, contextual details, and temporal order, enabling robust model evaluation (see Figure 4).

To assess annotation consistency, we score the five human captions for each video along five semantic dimensions: consistency, context, correctness, detail orientation, and temporality. We then compute the coefficient of variation (CV) across annotators for each video and use it to group the dataset into eight complexity levels (Groups A–H). Group A contains videos with the highest annotator agreement, while Group H includes highly ambiguous or subjective cases with substantial annotation disagreement.

Based on this grouping, we define a challenge subset FIOVAhard, which consists of Groups F–H. These videos are characterized by frequent scene transitions, multi-perspective narratives, and low human agreement, making them ideal for stress-testing LVLMs under cognitively demanding conditions.
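The grouping procedure above can be sketched as follows. This is a minimal illustration under stated assumptions: the text does not specify how the per-dimension CVs are combined into a single score per video or how the eight group boundaries are set, so the sketch averages the per-dimension CVs and uses equal-frequency (rank-based) binning. The dimension keys and all helper names are hypothetical.

```python
import statistics
from typing import Dict, List

# The five scoring dimensions named in the text; key spellings are illustrative.
DIMENSIONS = ["consistency", "context", "correctness", "detail_orientation", "temporality"]
GROUP_LABELS = "ABCDEFGH"  # A = highest agreement (lowest CV), H = lowest agreement
HARD_GROUPS = {"F", "G", "H"}  # groups that form FIOVAhard


def coefficient_of_variation(values: List[float]) -> float:
    """Population standard deviation divided by the mean (0.0 if the mean is 0)."""
    mean = statistics.fmean(values)
    if mean == 0:
        return 0.0
    return statistics.pstdev(values) / mean


def video_cv(scores: Dict[str, List[float]]) -> float:
    """Mean of the per-dimension CVs; scores[dim] holds one score per annotator.

    Averaging across dimensions is an assumption, not a rule stated in the text.
    """
    return statistics.fmean(coefficient_of_variation(scores[d]) for d in DIMENSIONS)


def assign_groups(cvs: Dict[str, float]) -> Dict[str, str]:
    """Assumed equal-frequency binning: sort videos by CV and cut into 8 groups."""
    ordered = sorted(cvs, key=cvs.get)
    n = len(ordered)
    return {vid: GROUP_LABELS[rank * len(GROUP_LABELS) // n] for rank, vid in enumerate(ordered)}


def hard_subset(groups: Dict[str, str]) -> List[str]:
    """Video IDs that fall into Groups F-H."""
    return sorted(vid for vid, g in groups.items() if g in HARD_GROUPS)
```

For example, sixteen toy videos whose CVs increase with their index land two per group, so the six with the highest CVs form the hard subset. Fixed CV thresholds would be an equally plausible binning rule; rank-based binning simply guarantees that every group is populated.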

To build the model-response corpus for FIOVA, we collected video captions generated by six state-of-the-art LVLMs: VideoLLaMA2, LLaVA-NEXT-Video, Video-LLaVA, VideoChat2, Tarsier, and ShareGPT4Video. Each model was given the same task: generate a detailed caption describing the visual content of each of the 3,002 videos in the FIOVA dataset.

For consistency and fairness, we applied a unified prompting strategy across models and carefully tuned each model's generation parameters, including temperature, maximum token length, and sampling method. All models received 8-frame video inputs. In total, 18,012 model responses (six models × 3,002 videos) were collected, forming a large-scale corpus of video-description-response triplets. This collection enables structured evaluation of video understanding capabilities and direct comparison with the human-annotated groundtruths.
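The 8-frame input setting can be illustrated with a small frame-sampling helper. The text does not say how the eight frames are chosen, so this sketch assumes segment-midpoint uniform sampling, a common convention for fixed-budget video inputs; the function name is hypothetical.

```python
from typing import List


def uniform_frame_indices(num_frames: int, num_samples: int = 8) -> List[int]:
    """Return num_samples frame indices spread evenly over [0, num_frames).

    The clip is split into num_samples equal segments and the midpoint of each
    segment is taken (an assumed sampling scheme). Clips shorter than
    num_samples frames yield repeated indices.
    """
    if num_frames <= 0 or num_samples <= 0:
        raise ValueError("num_frames and num_samples must be positive")
    segment = num_frames / num_samples
    return [min(num_frames - 1, int(segment * i + segment / 2)) for i in range(num_samples)]
```

A 33.6-second clip decoded at an assumed 30 fps has 1,008 frames, which yields indices 63, 189, 315, ..., 945 for eight samples. Passing `num_samples=32` corresponds to the 32-frame setting used in the frame-length ablation.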

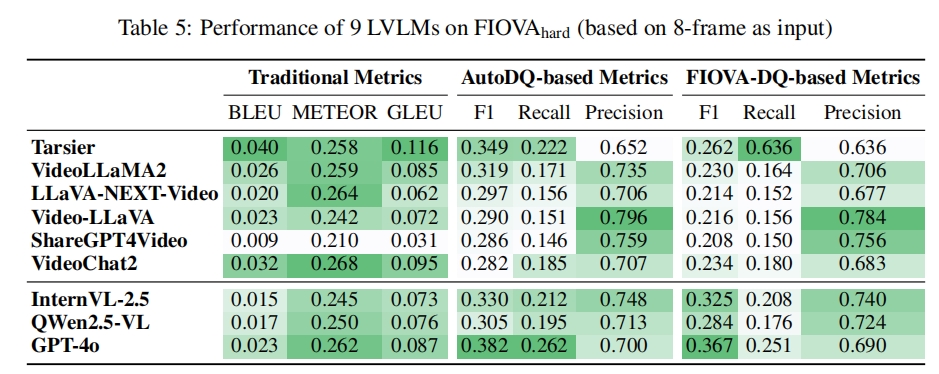

To complement our main evaluation, we further perform stress testing on FIOVAhard—a subset comprising the most semantically complex videos with low annotator agreement (Groups F–H). Beyond the six baseline models, we additionally include three frontier LVLMs—InternVL-2.5, Qwen2.5-VL, and GPT-4o—exclusively on this subset. This extended setting enables a focused assessment of model robustness and alignment under cognitively challenging conditions.

Combined with our cognitively weighted event-level evaluation framework FIOVA-DQ (see Figure 4), this comprehensive model response collection provides a foundation for in-depth analysis of model behaviors, failure modes, and human alignment across both typical and challenging video scenarios.

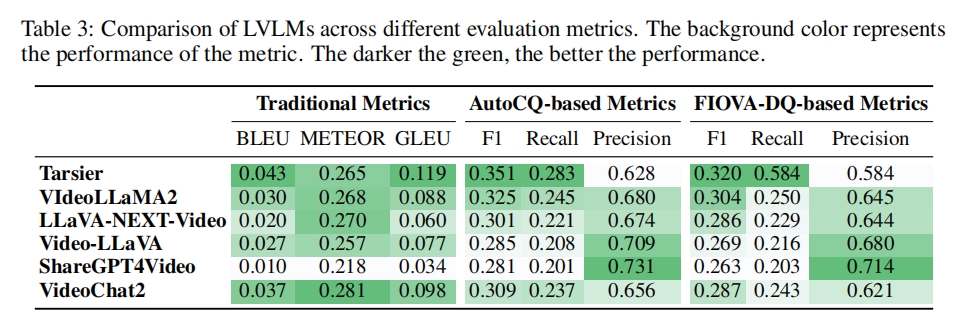

We evaluate the six baseline LVLMs using three complementary families of metrics: traditional lexical metrics (BLEU, METEOR, GLEU), the event-based AutoDQ framework, and our proposed cognitively weighted FIOVA-DQ. Each family captures a different aspect of output quality: surface-level lexical overlap, event-level structural completeness, and human-aligned semantic relevance, respectively.
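To make the idea of cognitive weighting concrete, here is a minimal sketch of a consensus-weighted, event-level precision/recall/F1. It is not the exact FIOVA-DQ formula. It assumes that each reference event carries a weight equal to the fraction of the five annotators who mention it, that event matching (which would come from an automatic judge in practice) is supplied directly as index pairs, and that only recall is weighted. `Event`, `consensus_weight`, and `weighted_event_prf` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass
class Event:
    text: str
    weight: float = 1.0  # hypothetical consensus weight of a reference event


def consensus_weight(mentions: int, num_annotators: int = 5) -> float:
    """Assumed weight: share of annotators whose caption mentions the event."""
    return mentions / num_annotators


def weighted_event_prf(
    gt_events: List[Event],
    num_pred_events: int,
    matches: Set[Tuple[int, int]],  # (pred_index, gt_index) pairs judged equivalent
) -> Tuple[float, float, float]:
    """Weighted recall over reference events; unweighted precision over predicted events."""
    matched_gt = {g for _, g in matches}
    matched_pred = {p for p, _ in matches}
    total_w = sum(e.weight for e in gt_events)
    recall = sum(gt_events[g].weight for g in matched_gt) / total_w if total_w > 0 else 0.0
    precision = len(matched_pred) / num_pred_events if num_pred_events > 0 else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall > 0 else 0.0
    return precision, recall, f1
```

With weights 1.0, 0.6, and 0.2 (events mentioned by 5, 3, and 1 of the 5 annotators), a caption with four predicted events that matches only the two lower-weight events gets a recall of 4/9 ≈ 0.44 instead of the unweighted 2/3, so missing the consensus event costs more. Setting every weight to 1.0 recovers plain event-level recall.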

As shown in Table 3, Tarsier achieves the highest F1 and recall under both AutoDQ and FIOVA-DQ, indicating strong event coverage. However, this comes at the cost of lower precision due to frequent overgeneration. In contrast, ShareGPT4Video attains the highest precision across both metrics but suffers from the lowest recall, revealing a conservative captioning strategy that often omits important content.

Models such as VideoLLaMA2 and LLaVA-NEXT-Video fall between these two extremes, with moderate precision–recall trade-offs. Importantly, FIOVA-DQ shows the strongest correlation with human preference among the evaluated metrics (Spearman ρ = 0.579), supporting its effectiveness as a cognitively grounded metric.
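The reported agreement with human preference is a Spearman rank correlation. The sketch below shows how such a coefficient can be computed between per-item metric scores and human preference scores; the protocol used to collect the human preferences behind ρ = 0.579 is not described here, so the inputs are placeholders. Tied values receive average ranks, matching the usual definition (`scipy.stats.spearmanr` computes the same quantity).

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho as the Pearson correlation of the average ranks."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences with at least 2 items")
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = sum(rx) / len(rx), sum(ry) / len(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    vx = sum((a - mx) ** 2 for a in rx)
    vy = sum((b - my) ** 2 for b in ry)
    if vx == 0 or vy == 0:
        raise ValueError("rho is undefined when one input is constant")
    return cov / (vx * vy) ** 0.5
```

Computing rank-based rather than linear correlation makes the comparison insensitive to the different numeric scales of BLEU, AutoDQ, and FIOVA-DQ scores.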

These results highlight the limitations of relying solely on traditional lexical overlap and emphasize the value of semantically and cognitively informed evaluation frameworks for diagnosing model behaviors in complex video captioning tasks.

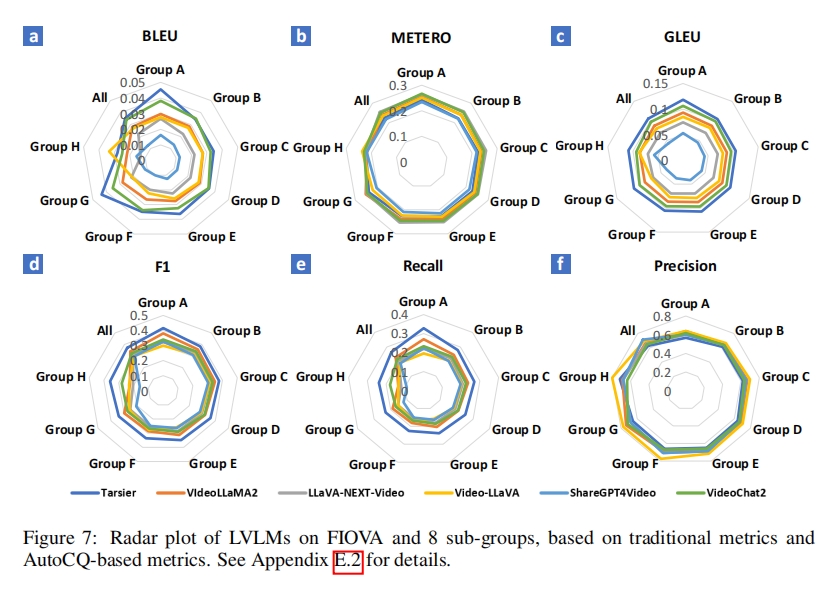

To examine model robustness under different levels of semantic complexity, we divide the FIOVA dataset into eight sub-groups (Groups A–H) based on the coefficient of variation (CV) across six annotation dimensions. These groups reflect varying degrees of annotator agreement and thus serve as a proxy for input ambiguity and difficulty.

We evaluate each LVLM's performance within these groups using both AutoDQ and FIOVA-DQ. As shown in Figure 7, Tarsier achieves the highest recall and F1 scores across most groups, suggesting strong event coverage and temporal modeling. In contrast, ShareGPT4Video consistently yields the highest precision at the cost of lower recall, reflecting a conservative captioning strategy. All models show clear performance drops in Group H, which contains the most cognitively complex videos, revealing that current LVLMs still struggle with multi-event, ambiguous scenarios.

These results reveal distinct behavioral strategies: models like Tarsier tend to prioritize recall and semantic coverage, while others like ShareGPT4Video favor precision and stylistic consistency. This trade-off highlights the importance of building balanced LVLMs that can adapt to both simple and complex video inputs.

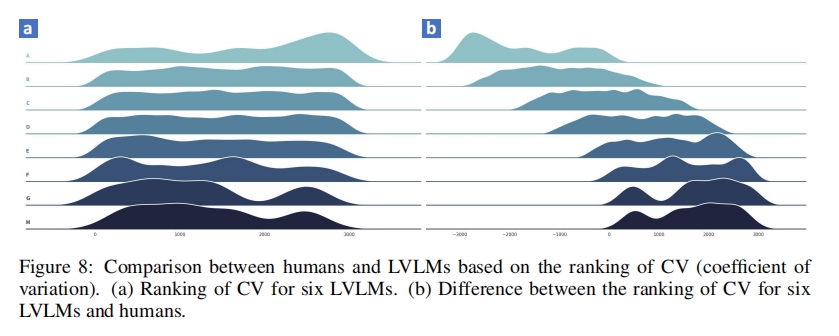

In Figure 8, we further analyze consistency trends by comparing CV rankings between humans and models. Interestingly, models show higher variability in simple videos (Groups A–B), whereas human annotations become more diverse in complex videos (Groups F–H). This inverse trend suggests that LVLMs resort to safer, template-like generations under uncertainty, while humans diversify their interpretations—underscoring the need for cognitively aligned evaluation frameworks.
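One way to quantify this inverse trend is to rank the eight groups by mean human CV and by mean model CV and measure how well the two orderings agree; a value near -1 indicates inversion. The sketch uses Kendall's tau-a over group labels. It assumes the model-side CV is computed analogously to the human one (for example, across the models' outputs for each video), which the text does not spell out, and the function name is hypothetical.

```python
from itertools import combinations
from typing import Dict


def kendall_tau(x: Dict[str, float], y: Dict[str, float]) -> float:
    """Kendall's tau-a over shared keys: (concordant - discordant) / total pairs.

    Tied pairs count as neither concordant nor discordant (tau-a convention).
    """
    keys = sorted(x.keys() & y.keys())
    n_pairs = len(keys) * (len(keys) - 1) // 2
    if n_pairs == 0:
        raise ValueError("need at least two shared groups")
    score = 0
    for a, b in combinations(keys, 2):
        sign = (x[a] - x[b]) * (y[a] - y[b])
        score += (sign > 0) - (sign < 0)  # +1 concordant, -1 discordant, 0 tied
    return score / n_pairs
```

With toy per-group values (not results) in which human CV rises from Group A to Group H while model CV falls, every pair of groups is discordant and the function returns -1.0.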

We evaluate nine representative LVLMs on FIOVAhard, the high-complexity subset comprising Groups F–H, to diagnose model performance under cognitively demanding conditions. As shown in Table 5, Tarsier achieves the highest FIOVA-DQ recall (0.636), reflecting strong event coverage, but its lower precision reduces its F1 score to 0.262. In contrast, GPT-4o attains the best overall F1 score (0.367) by balancing recall and precision, while InternVL-2.5 and Qwen2.5-VL achieve the highest precision (0.740 and 0.724, respectively), indicating conservative but accurate captioning.

These results show that while some models excel at identifying more events (high recall), others favor accuracy (high precision). FIOVA-DQ highlights this trade-off and better reflects human alignment than lexical metrics.

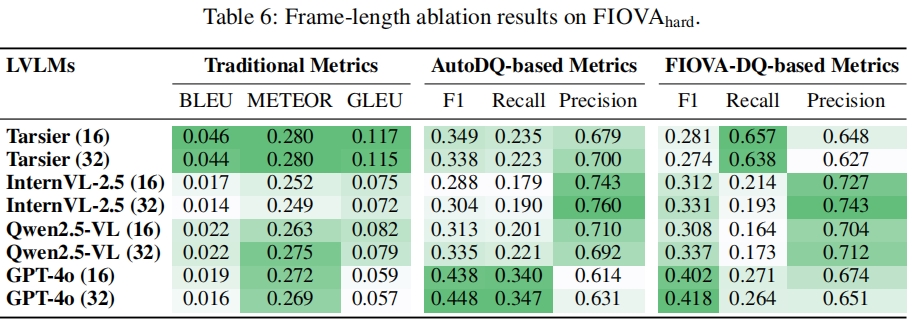

To explore temporal sensitivity, we further conduct a frame-length ablation on selected models (Table 6). When the input length increases from 8 to 32 frames, GPT-4o improves substantially in F1 (0.367 → 0.418) and modestly in recall (0.251 → 0.264), indicating that it can leverage additional temporal context. InternVL-2.5 maintains high precision (rising to 0.743), while Tarsier remains recall-oriented but plateaus in F1. These results indicate that longer temporal context can enhance performance for advanced models, while others may saturate.

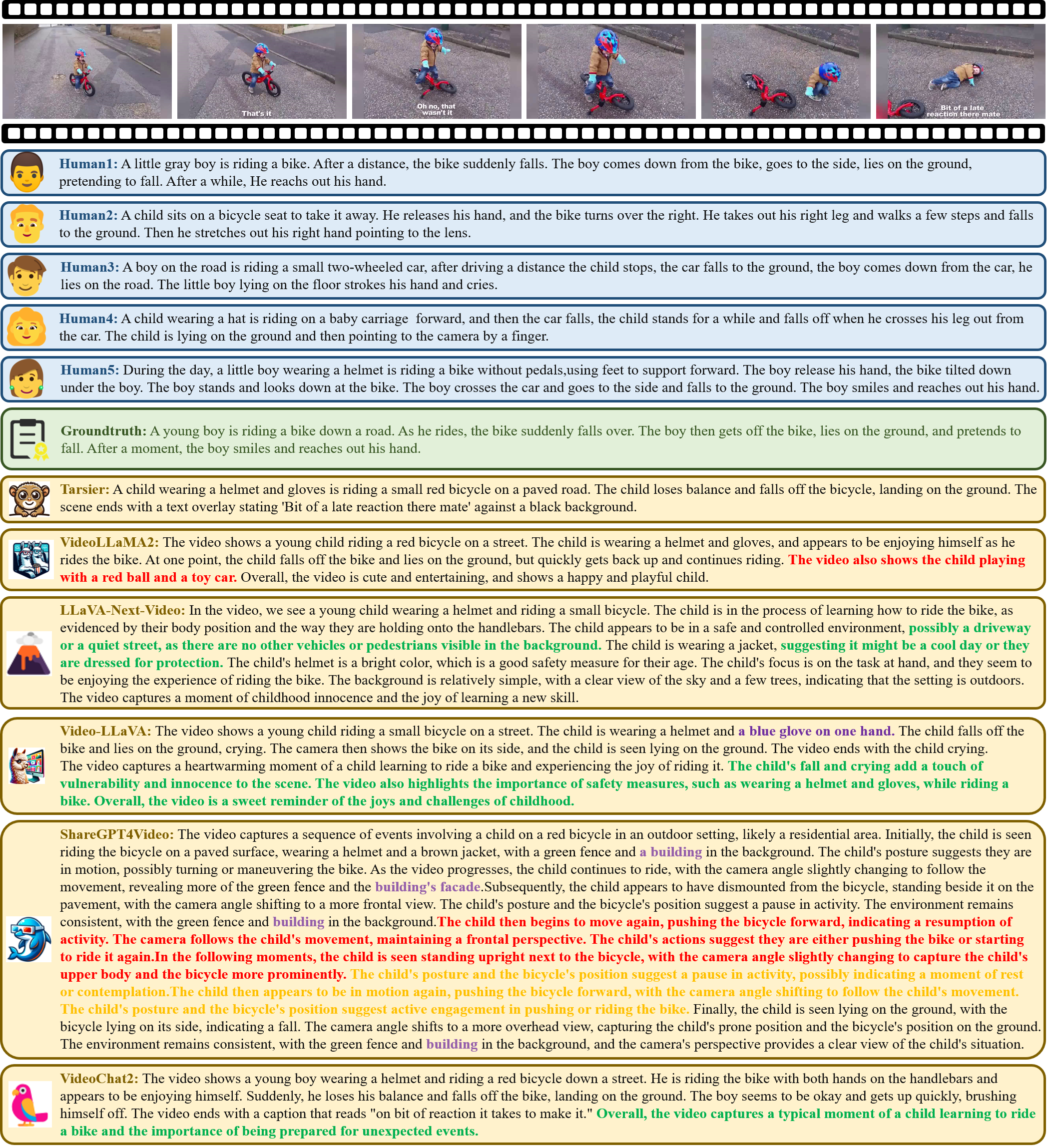

The following examples illustrate five common error types in LVLM-generated captions, each highlighted using a specific color:

By categorizing and visualizing these errors, we provide a structured lens for analyzing the reliability of model-generated descriptions, and offer diagnostic insights into improving future LVLM performance.

Analysis:

Human performance in video description tasks is remarkably consistent, especially in simpler scenarios, where annotators capture key content and provide accurate descriptions with minimal variation. In contrast, LVLMs show significant limitations in these same scenarios, often missing critical details and falling short of human descriptive ability. This discrepancy likely stems from the models' limited ability to comprehend the overall context and to integrate video events with background information, both of which are essential for accurate and coherent descriptions. Among the LVLMs, LLaVA-NEXT-Video, Video-LLaVA, and VideoChat2 frequently produce redundant output. ShareGPT4Video shows pronounced hallucinations and repetitive descriptions in this example, highlighting its difficulty in maintaining precision on this video. Tarsier avoids hallucination and excessive redundancy but suffers from omissions, such as neglecting the actions that occur after the boy lies on the ground. These findings underscore the persistent gap between LVLMs and human performance, particularly in scenarios requiring detailed understanding and contextual integration.