About Me

Hi there, I am Shiyu Hu (胡世宇)!

Currently, I am a Research Fellow at Nanyang Technological University (NTU), working with Prof. Kang Hao Cheong. Before that, I received my Ph.D. from the Institute of Automation, Chinese Academy of Sciences (中国科学院自动化研究所) and the University of Chinese Academy of Sciences (中国科学院大学) in Jan. 2024, supervised by Prof. Kaiqi Huang (黄凯奇) (IAPR Fellow) and co-supervised by Prof. Xin Zhao (赵鑫). I received my master’s degree from the Department of Computer Science, the University of Hong Kong (HKU), under the supervision of Prof. Choli Wang (王卓立).

📣 If you are interested in my research or would like to collaborate, feel free to contact me (shiyu.hu@ntu.edu.sg)! Both online and offline collaborations are welcome.

🔥 News

2025.12: 📣We will conduct a Mini-Symposium (topic: Complex Network Systems and Large Language Models) at NODYCON 2026 (The Fifth International Nonlinear Dynamics Conference); more information will be released soon.

2025.11: 📝Three papers have been accepted by the AI for Education Workshop in the 40th Annual AAAI Conference on Artificial Intelligence (AAAIW).

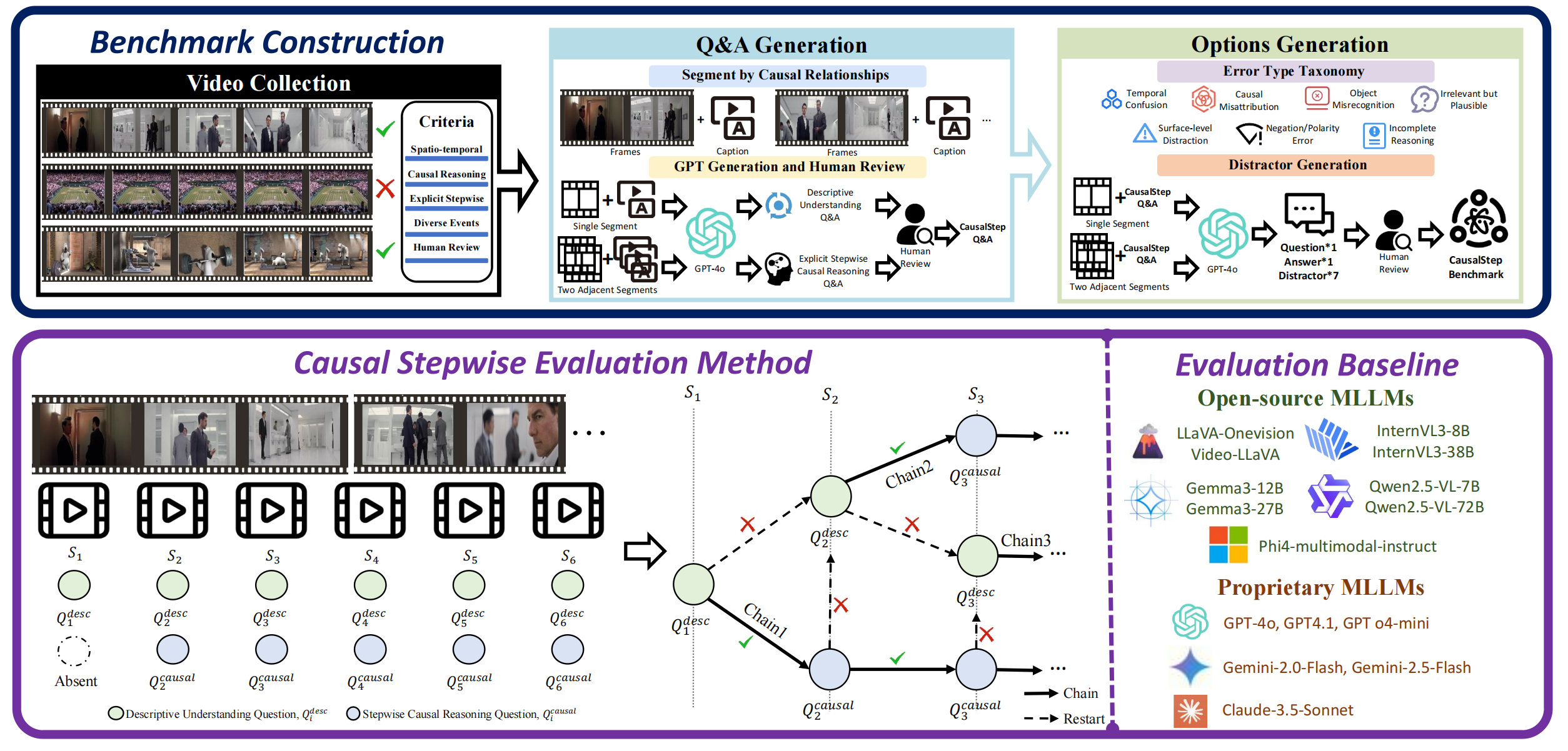

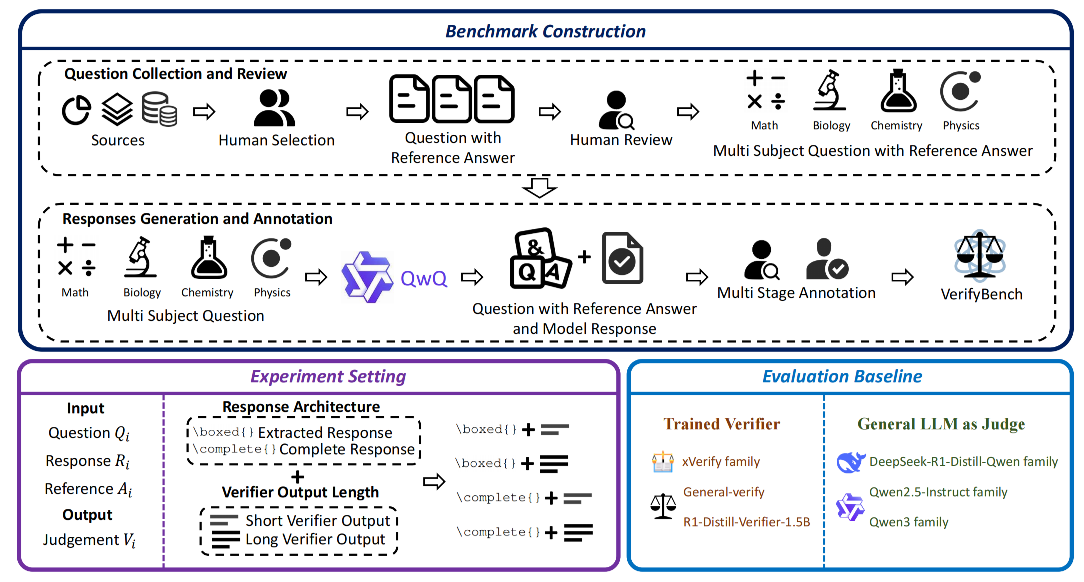

2025.11: 📝Two papers (CausalStep and VerifyBench) have been accepted by the 40th Annual AAAI Conference on Artificial Intelligence (AAAI, CCF-A Conference, Oral).

2025.10: 📣We have conducted a tutorial at the 28th European Conference on Artificial Intelligence (ECAI) (26th October, 2025, Bologna, Italy).

2025.10: 📣We have conducted a tutorial at the 2025 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (5th October, 2025, Vienna, Austria).

2025.08: 📣We have conducted a tutorial at the 34th International Joint Conference on Artificial Intelligence (IJCAI) (18th August, 2025, Montreal, Canada).

2025.07: 🏆Received the IEEE SMCS TEAM Program Award.

2025.06: 📝One co-first-author paper (ATCTrack) has been accepted by the International Conference on Computer Vision (ICCV, CCF-A conference, Highlight).

2025.05: 📝One review paper has been accepted by Computers and Education: Artificial Intelligence.

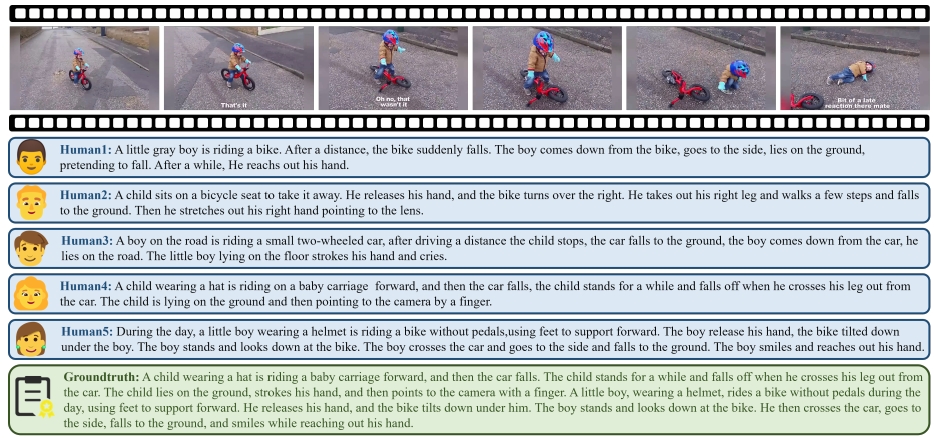

2025.05: 📣Our new work FIOVA is now online! We introduce a multi-annotator benchmark for human-aligned video captioning, supporting semantic diversity and cognitive-aware evaluation. Check out the project page and arXiv paper for more details.

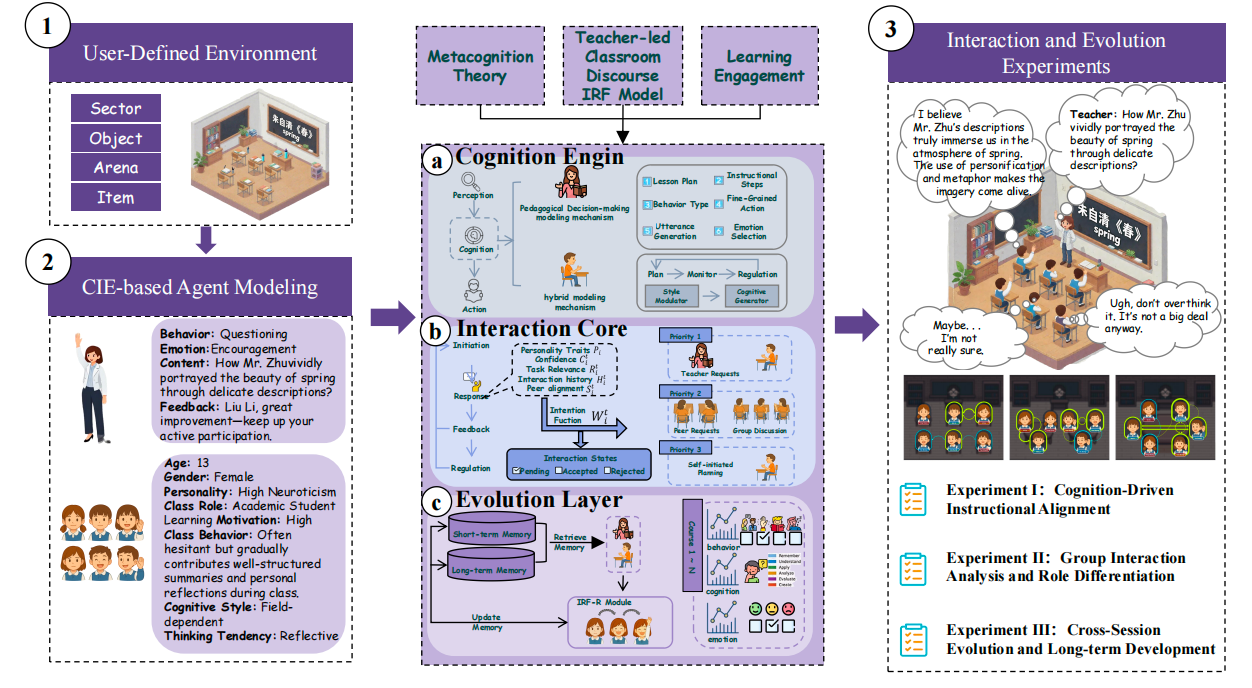

2025.05: 📣Our updated work SOEI is now available! Building upon our previous framework, this version introduces interactive multi-turn simulation to model open-ended educational dialogues with cognitively plausible virtual students. We further validate the framework’s effectiveness through behavioral analysis, personality recognition, and teacher-student reflection. Read more in the arXiv paper.

2025.05: 📣We will present our work SOTVerse (IJCV 2024) during the VALSE 2025 poster session (June 2025, Zhuhai, China).

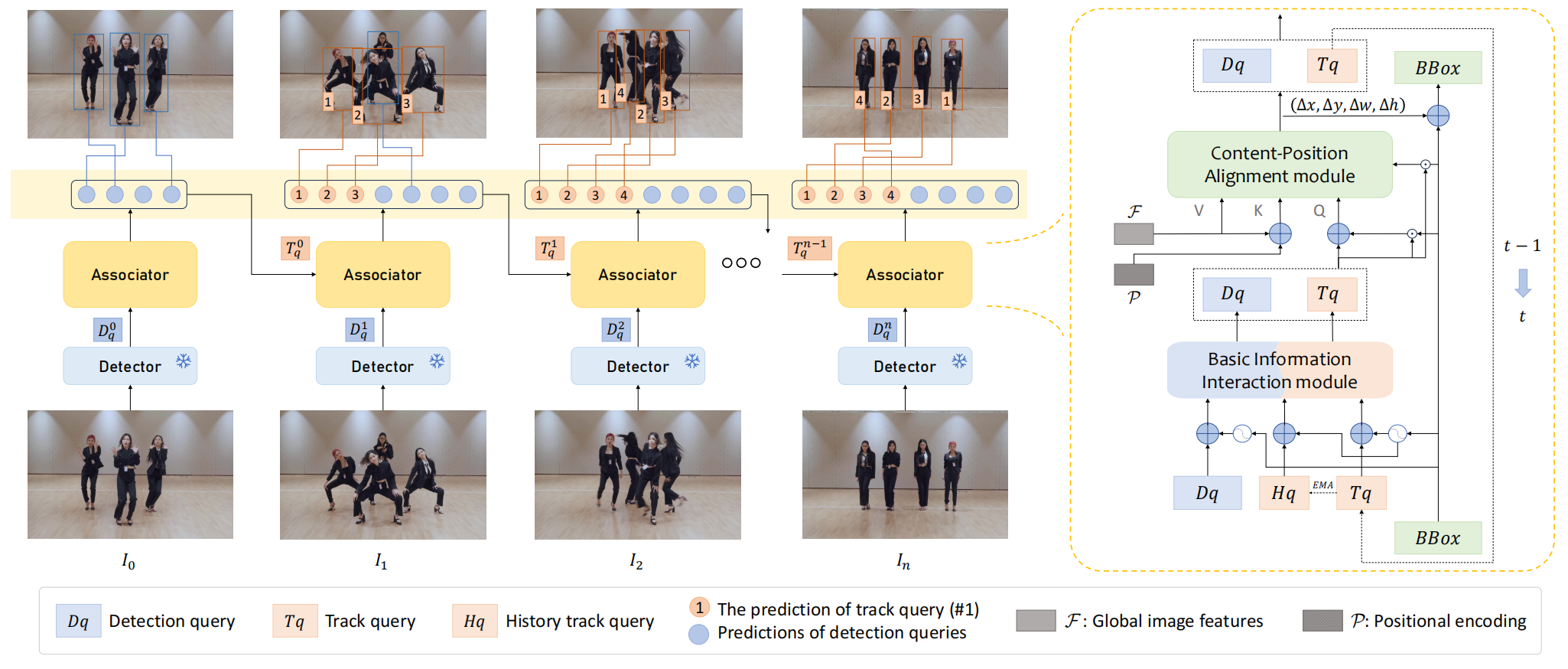

2025.05: 📝One paper (CSTrack) has been accepted by the International Conference on Machine Learning (ICML, CCF-A conference).

2025.05: 📝One corresponding-author paper (DARTer) has been accepted by the International Conference on Multimedia Retrieval (ICMR, CCF-B conference).

2025.05: 📝One corresponding-author paper (MSAD) has been accepted by IET Computer Vision (IET-CVI, CCF-C journal).

2025.04: 📣We will conduct a tutorial at the 28th European Conference on Artificial Intelligence (ECAI) (25th-30th October, 2025, Bologna, Italy).

2025.03: 📣We will conduct a tutorial at the 2025 IEEE International Conference on Systems, Man, and Cybernetics (SMC) (5th-8th October, 2025, Vienna, Austria).

2025.02: 📖The book Visual Object Tracking: An Evaluation Perspective is online.

2025.01: 📝One paper (CTVLT) has been accepted by the IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP, CCF-B conference).

2025.01: 📣A special issue (Techniques and Applications of Multimodal Data Fusion) in Electronics has been announced; submissions related to this topic are welcome!

2024.12: 📣We have conducted a tutorial at the Asian Conference on Computer Vision (ACCV) (Dec. 9th, 2024, Hanoi, Vietnam).

2024.12: 📣We have prepared a tutorial at the International Conference on Pattern Recognition (ICPR) (Dec. 1st, 2024, Kolkata, India).

👩‍💻 Experience

2024.08 - Now : Research Fellow at Nanyang Technological University (NTU)

- Direction: AI4Science, Computer Vision

- PI: Prof. Kang Hao Cheong

2018.03 - 2018.11 : Research Assistant at University of Hong Kong (HKU)

- Direction: High Performance Computing, Heterogeneous Computing

- PI: Prof. Choli Wang

2016.08 - 2016.09 : Research Intern at Institute of Electronics, Chinese Academy of Sciences (CASIE)

📖 Education

2019.09 - 2024.01 : Ph.D. in Institute of Automation, Chinese Academy of Sciences (CASIA) and University of Chinese Academy of Sciences (UCAS)

- Major: Computer Applied Technology

- Supervisor: Prof. Kaiqi Huang (IAPR Fellow, IEEE Senior Member, 10,000 Talents Program - Leading Talents)

- Co-supervisor: Prof. Xin Zhao (IEEE Senior Member, Beijing Science Fund for Distinguished Young Scholars)

- Thesis Title: Research of Intelligence Evaluation Techniques for Single Object Tracking

- Thesis Committee: Prof. Jianbin Jiao, Prof. Yuxin Peng (The National Science Fund for Distinguished Young Scholars), Prof. Yao Zhao (IEEE Fellow, IET Fellow, The National Science Fund for Distinguished Young Scholars), Prof. Yunhong Wang (IEEE Fellow, IAPR Fellow, CCF Fellow), Prof. Ming Tang

- Thesis Defense Grade: Excellent

2017.09 - 2019.06 : M.S. in Department of Computer Science, University of Hong Kong (HKU)

- Major: Computer Science

- Supervisor: Prof. Choli Wang

- Thesis Title: NightRunner: Deep Learning for Autonomous Driving Cars after Dark [🌐Project]

- Thesis Defense Grade: A+

2013.09 - 2017.06 : B.E. in Elite Class in School of Information and Electronics, Beijing Institute of Technology (BIT)

- Major: Information Engineering

- Supervisor: Prof. Senlin Luo

- Thesis Title: Text Sentiment Analysis Based on Deep Neural Network

- Thesis Defense Grade: Excellent

2015.07 - 2015.08 : Summer Semester in University of California, Berkeley (UCB)

- Major: New Media

- Course Grade: A

🔍️ Research Interests

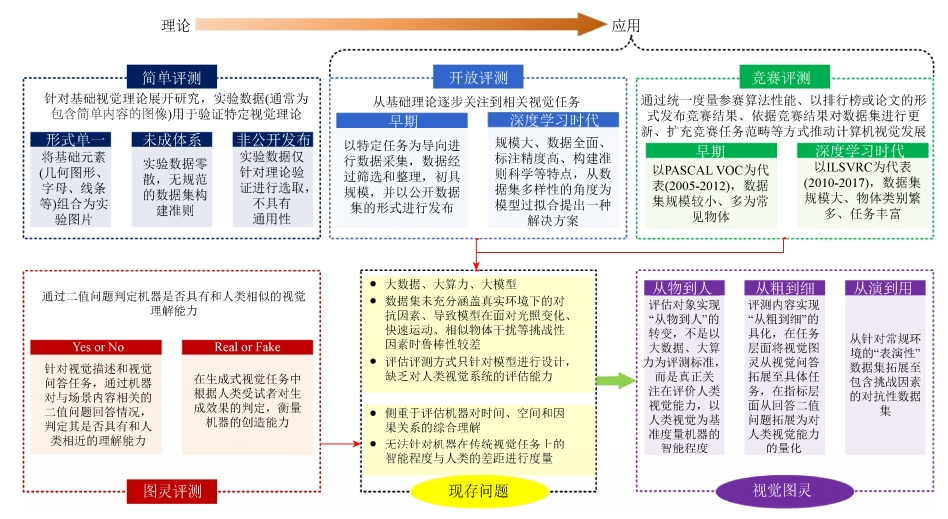

Research Foundation

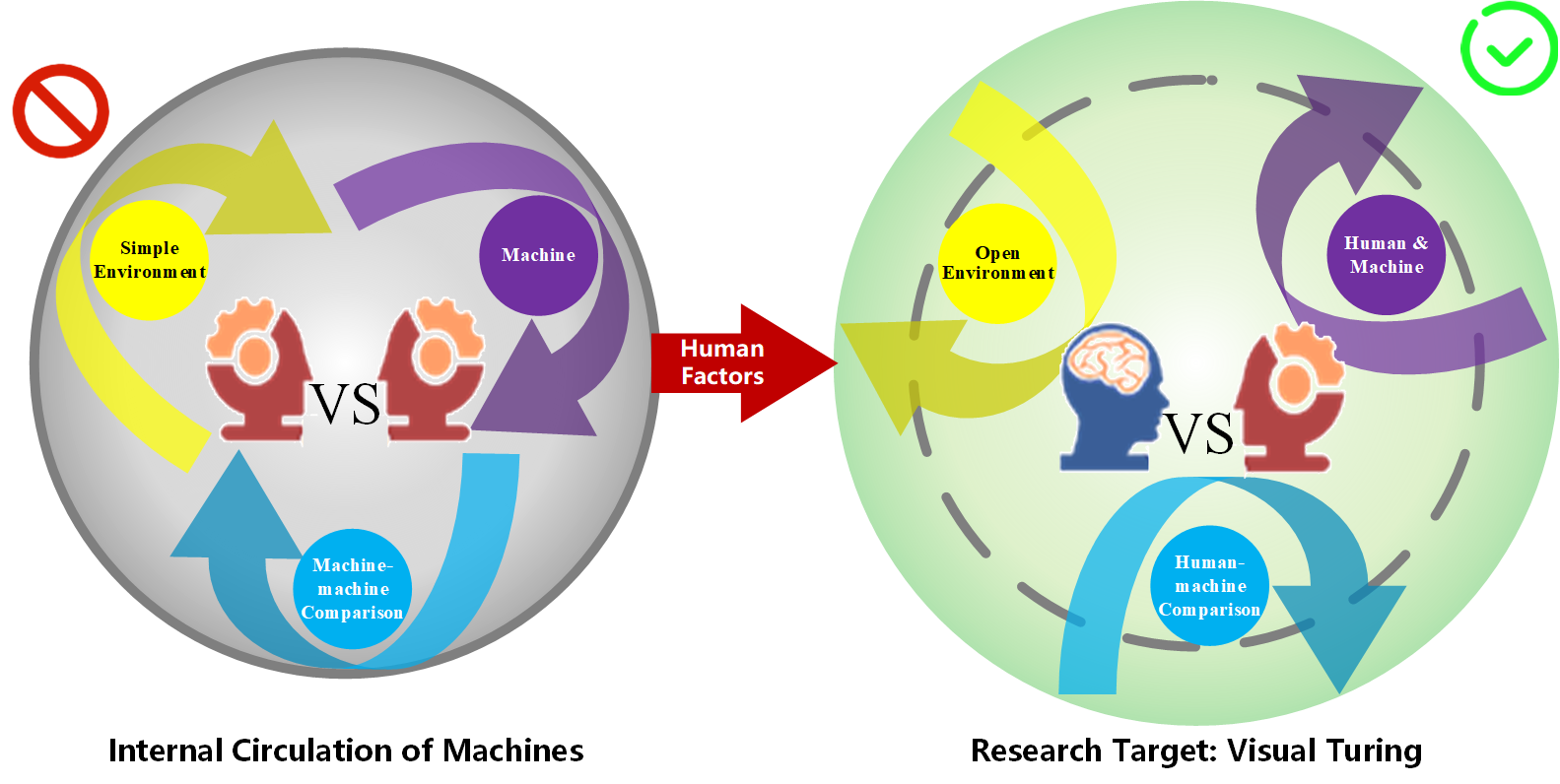

My research has long focused on evaluating and modeling machine vision intelligence, covering task modeling, environment construction, evaluation techniques, and human–machine comparisons. I firmly believe that the development of artificial intelligence is inherently intertwined with human factors. Inspired by the classical Turing Test, I have extended this concept to visual understanding, proposing the Visual Turing Test as a human-centered framework for evaluating dynamic vision tasks. The overarching goal is to benchmark machine visual intelligence against human abilities, building trustworthy and explainable evaluation systems that advance us toward secure and reliable Artificial General Intelligence (AGI).

1️⃣ What abilities define human perception? Designing more human-like visual tasks

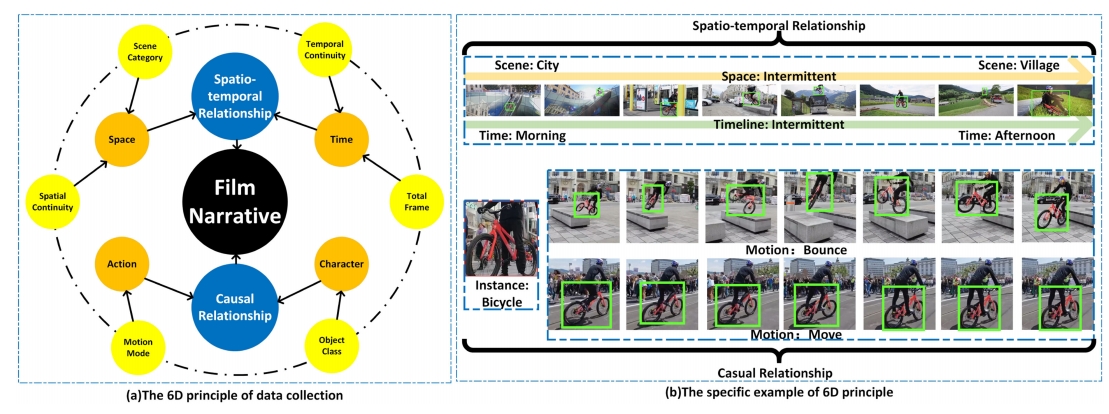

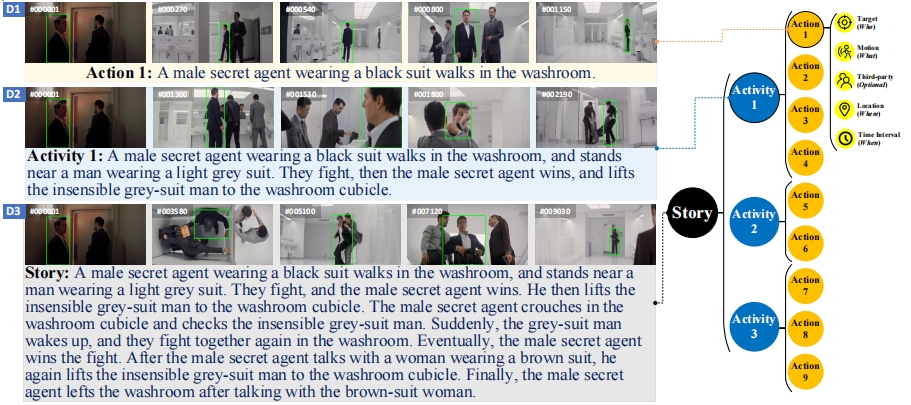

I take Visual Object Tracking (VOT) as a representative task to explore the boundaries of machine dynamic visual ability. Traditional VOT is limited by its assumption of continuous motion, which fails to align with human cognitive tracking capabilities. To overcome this, I proposed Global Instance Tracking (GIT) — a humanoid-inspired reformulation that shifts tracking from a short-term perceptual level to a long-term cognitive level. Building on this, I introduced Multi-modal GIT (MGIT) by incorporating hierarchical semantic structures, enabling machines to perform visual reasoning over complex spatio-temporal causal relationships. Together, these extensions mark a transition from perceptual recognition to cognitive understanding.

2️⃣ What environments do humans perceive? Constructing more open and realistic visual spaces

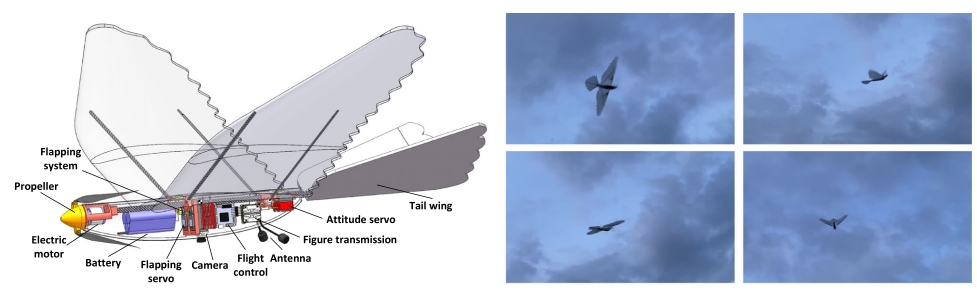

Human environments are dynamic, continuous, and semantically rich, yet most datasets remain static and task-limited. To address this, I developed the VideoCube benchmark by integrating narrative theory to decompose video content into interpretable units. Building further, I proposed SOTVerse, a large-scale and open task space (12.56M frames) that enables flexible subspace generation for evaluating visual generalization across diverse conditions. To tackle visual robustness in real-world dynamics, I further developed BioDrone — the first bio-inspired flapping-wing drone benchmark — providing a novel testing ground for robust visual intelligence under motion perturbations and environmental challenges.

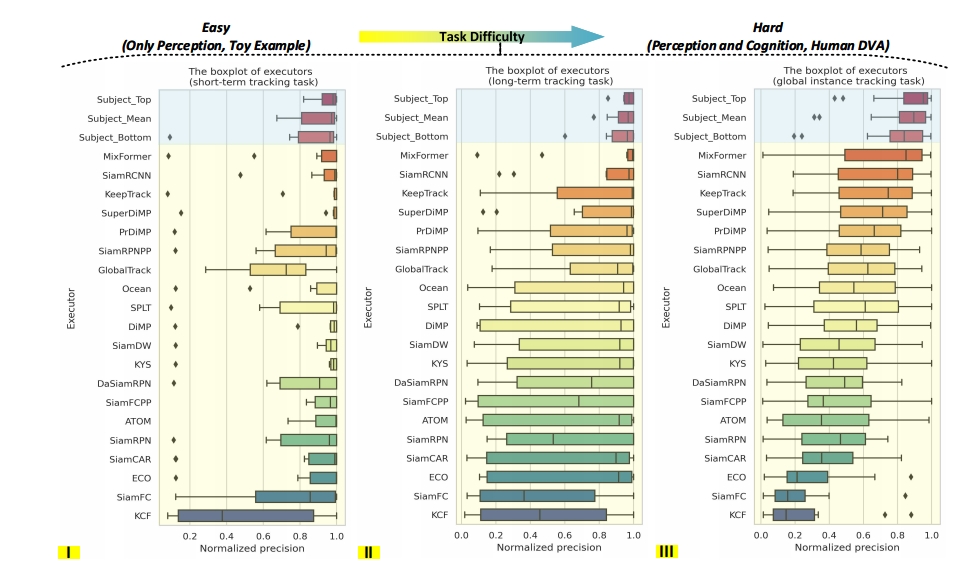

3️⃣ How large is the human–machine gap? Benchmarking machine vision against human ability

While computer scientists evaluate models on large datasets and neuroscientists assess humans in controlled experiments, this disciplinary gap prevents unified human–machine evaluation. To bridge this, I constructed a unified evaluation environment based on SOTVerse, enabling direct human–machine comparisons in perception, cognition, and robustness. Results reveal that recent algorithms are closing the gap with human subjects, with humans excelling in semantic understanding and machines in precision and persistence. This complementary behavior suggests the emerging potential for human–machine collaborative intelligence in dynamic vision.

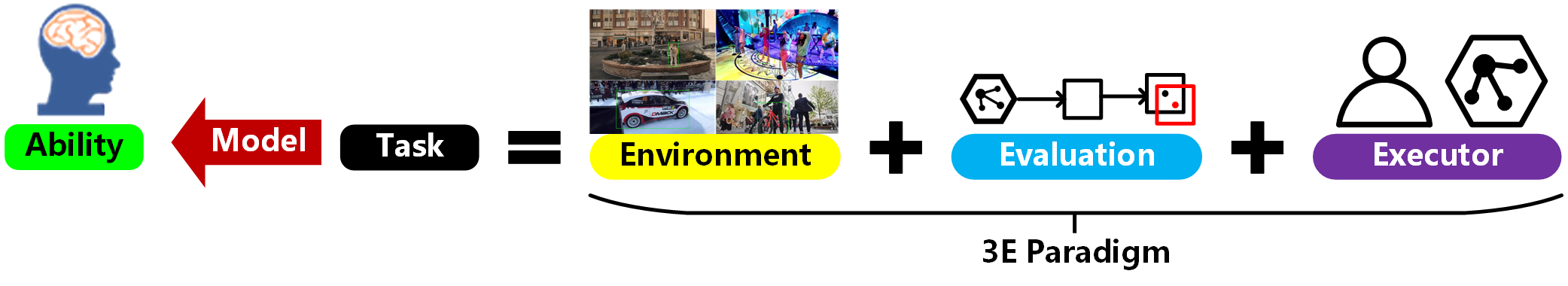

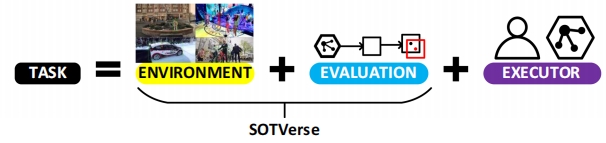

This human-centered evaluation paradigm is formalized as the 3E Framework — Environment, Evaluation, and Executors — forming a closed loop that defines, measures, and evolves intelligent behavior. Machines acquire human-like abilities by iteratively performing humanoid proxy tasks within evolving environments and evaluation criteria. Through this iterative mechanism, their cognitive upper bounds are continuously improved, laying the foundation for building evaluative, explainable, and human-aligned intelligence.

Current Research Interests

Visual Intelligence

- Focuses on visual intelligence as the core channel to study how AI systems perceive, reason, and interpret in complex environments.

- Builds interpretable and generalizable cognitive evaluation frameworks under the “Environment–Task–Executor” paradigm.

- Explores unified quantitative models for robustness, generalization, and safety, promoting a paradigm shift from performance-driven to cognition-driven evaluation.

- Investigates human-referenced measurement principles of intelligence to support the development of human–AI integrated cognitive systems.

Multimodal Cognition

- Investigates the structural role of vision within multimodal cognition, exploring unified mechanisms for cross-modal fusion and spatiotemporal reasoning.

- Develops multiscale models from perception to semantics to reveal intrinsic connections among vision, language, and knowledge.

- Studies semantic diversity, causal associations, and narrative generation to build explainable and generalizable multimodal understanding frameworks.

- Advances visual understanding from static perception toward dynamic cognition, providing a structural foundation for next-generation multimodal intelligence.

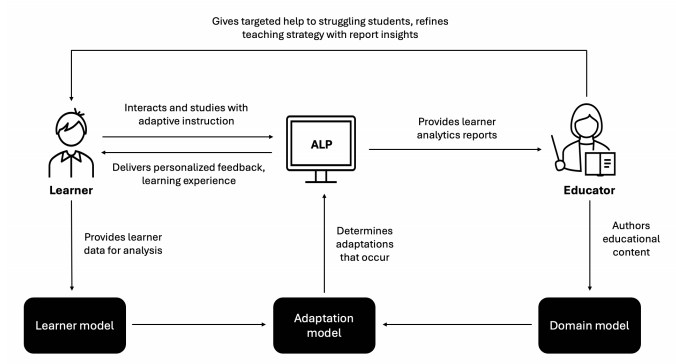

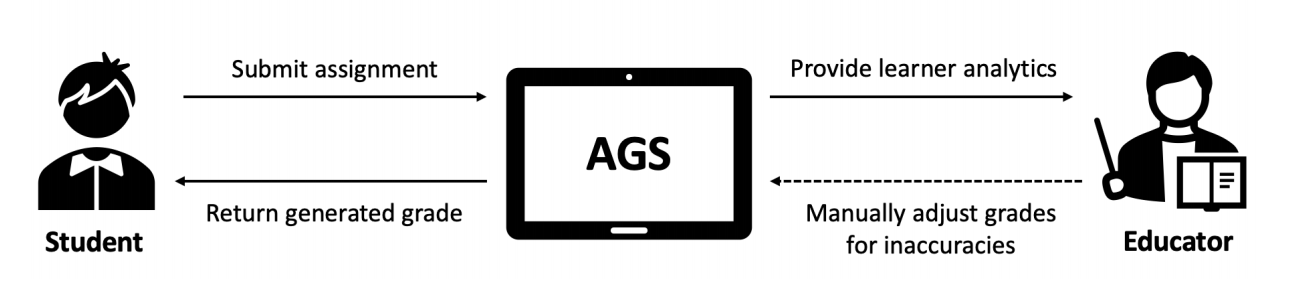

AI4Edu

- Positions educational environments as ideal domains for studying human–AI co-evolution and cognitive learning mechanisms.

- Focuses on intelligent agents with personality, cognition, and social adaptability, emphasizing cognitive tracking, personalized feedback, and adaptive learning.

- Explores multi-agent collaboration and reflective learning mechanisms, enabling human–AI interaction with understanding, empathy, and shared growth.

- Promotes the transformation of educational AI from an assistive tool to a cognitive partner, fostering educational equity, innovation, and sustainable learning.

AI4Science

- Explores the cognitive modeling pathways of AI in scientific discovery, experimental design, and knowledge reasoning.

- Studies AI’s cognitive role in scientific understanding, data modeling, and hypothesis generation, abstracting cognitive principles from human reasoning.

- Constructs integrated vision–language–symbol frameworks for scientific intelligence, bridging computational learning and human scientific cognition.

- Advances interdisciplinary applications of AI in education, medicine, psychology, and cognitive science toward the co-evolution of artificial and human intelligence.

📝 Publications

Book

Visual Object Tracking: An Evaluation Perspective

X. Zhao, Shiyu Hu, X. Yin

Springer, Part of the book series: Advances in Computer Vision and Pattern Recognition (ACVPR)

📌 Visual Object Tracking 📌 Intelligent Evaluation Technology

📃 Book

Accepted

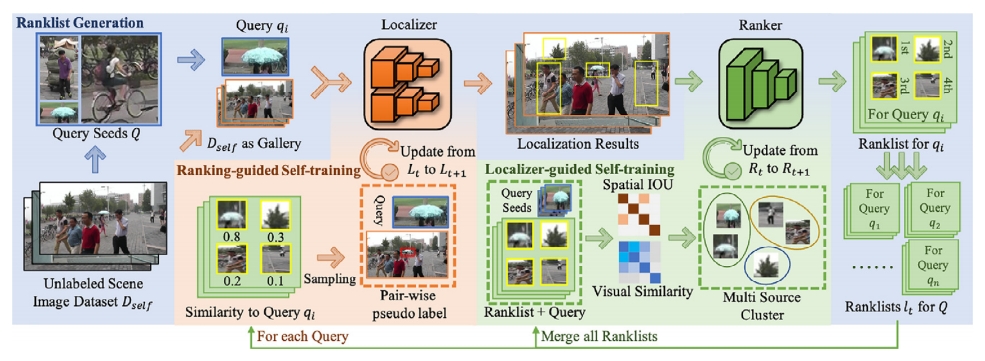

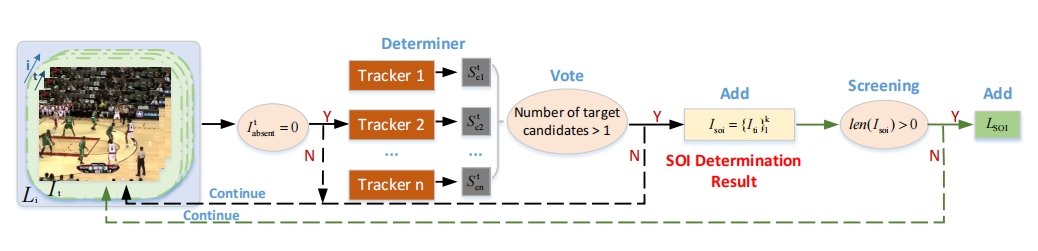

Global Instance Tracking: Locating Target More Like Humans

Shiyu Hu, X. Zhao, L. Huang, K. Huang

IEEE Transactions on Pattern Analysis and Machine Intelligence (CCF-A Journal)

📌 Visual Object Tracking 📌 Large-scale Benchmark Construction 📌 Intelligent Evaluation Technology

📃 Paper 📑 PDF 🪧 Poster 🌐 Platform 🔧 Toolkit 💾 Dataset

SOTVerse: A User-defined Task Space of Single Object Tracking

Shiyu Hu, X. Zhao, K. Huang

International Journal of Computer Vision (CCF-A Journal)

📌 Visual Object Tracking 📌 Dynamic Open Environment Construction 📌 3E Paradigm

📃 Paper 📑 PDF 🪧 Poster 🌐 Platform

BioDrone: A Bionic Drone-based Single Object Tracking Benchmark for Robust Vision

X. Zhao, Shiyu Hu✉️, Y. Wang, J. Zhang, Y. Hu, R. Liu, H. Lin, Y. Li, R. Li, K. Liu, J. Li

International Journal of Computer Vision (CCF-A Journal)

📌 Visual Object Tracking 📌 Drone-based Tracking 📌 Visual Robustness

📃 Paper 🌐 Platform 📑 PDF 🔧 Toolkit 💾 Dataset

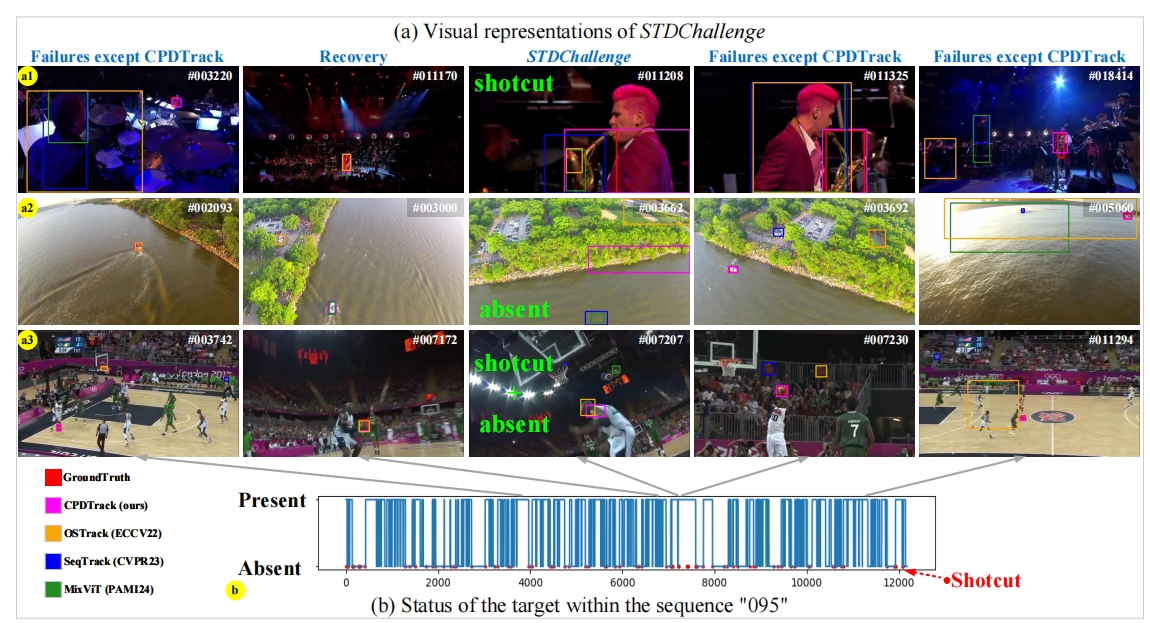

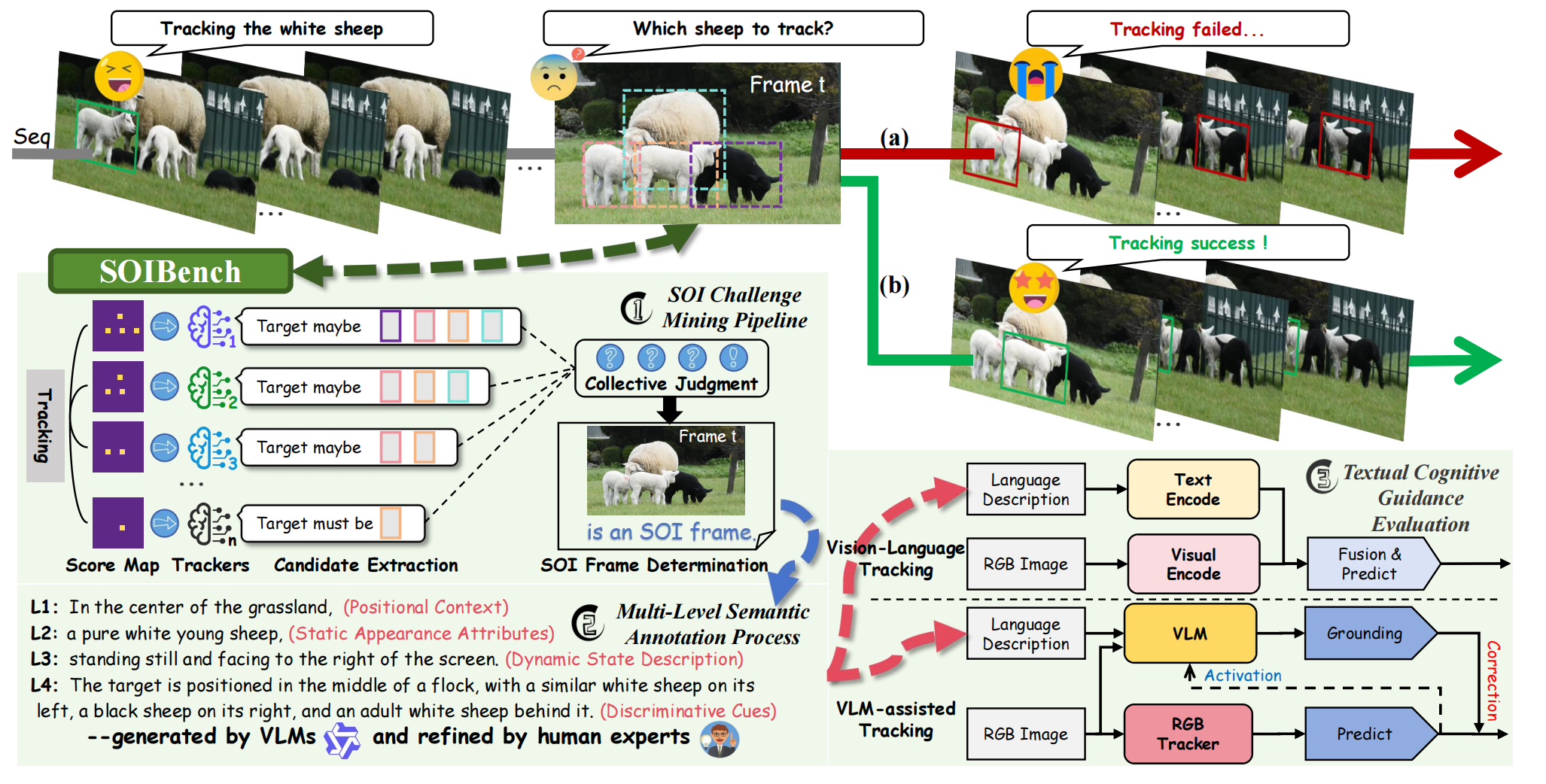

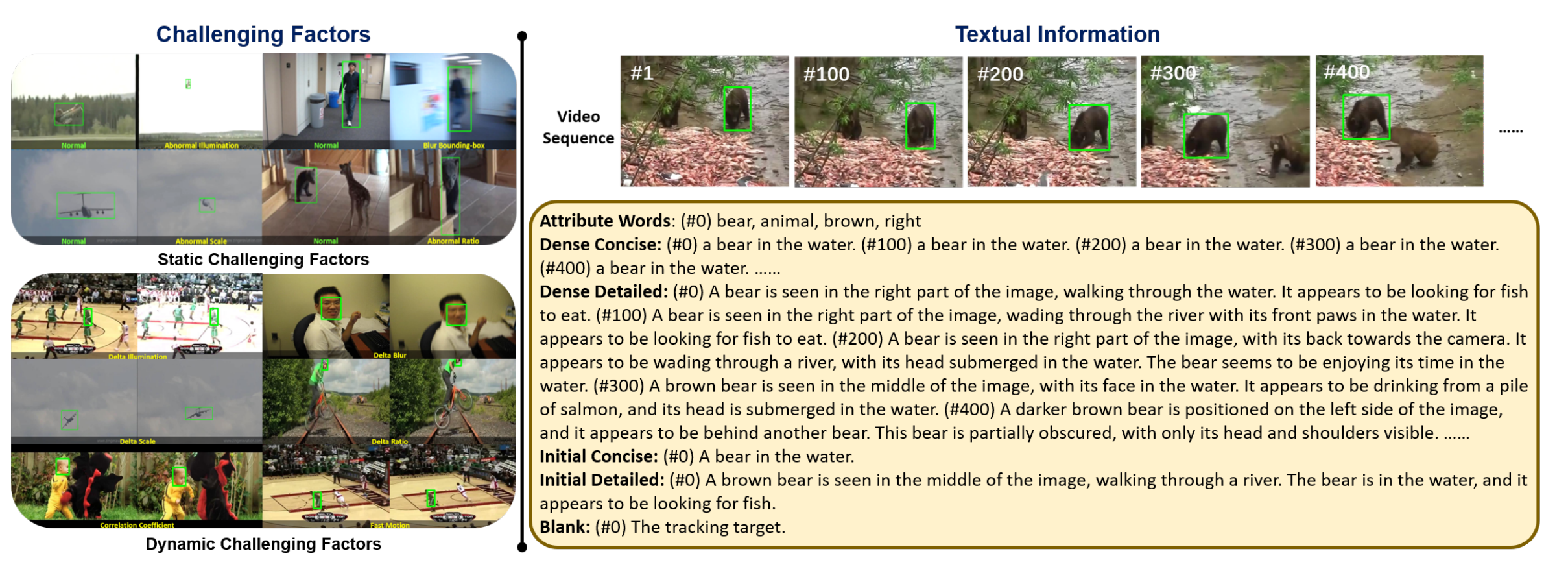

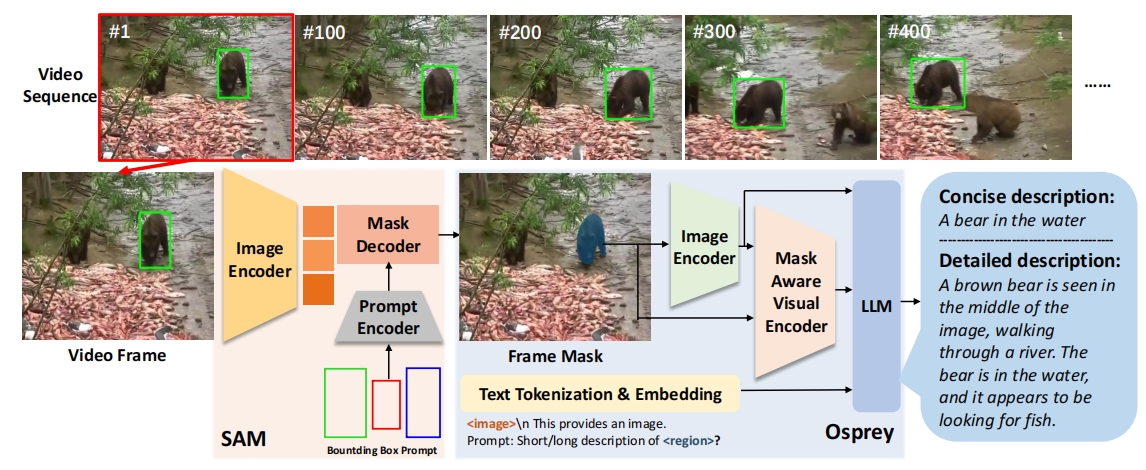

A Multi-modal Global Instance Tracking Benchmark (MGIT): Better Locating Target in Complex Spatio-temporal and Causal Relationship

Shiyu Hu, D. Zhang, M. Wu, X. Feng, X. Li, X. Zhao, K. Huang

Conference on Neural Information Processing Systems (CCF-A Conference, Poster)

📌 Visual Language Tracking 📌 Long Video Understanding and Reasoning 📌 Hierarchical Semantic Information Annotation

📃 Paper 📑 PDF 🪧 Poster 📹 Slides 🌐 Platform 🔧 Toolkit 💾 Dataset

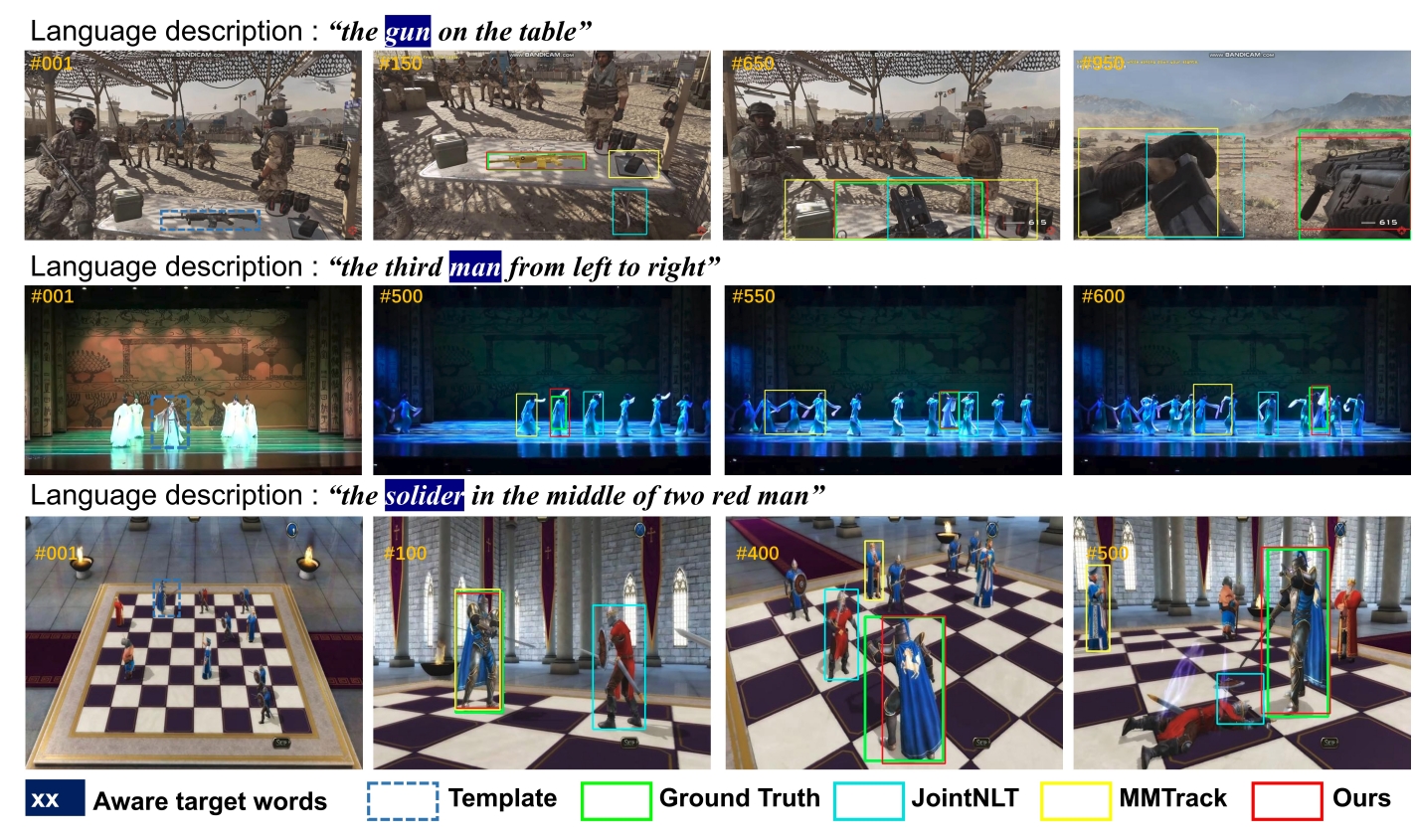

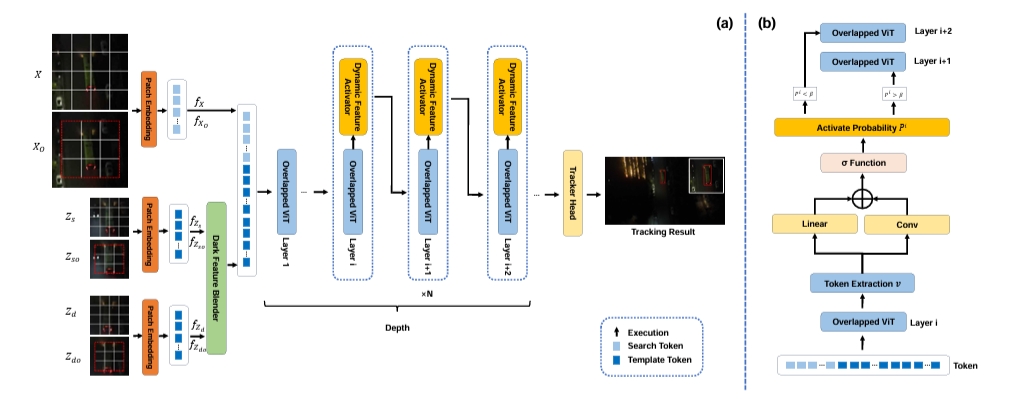

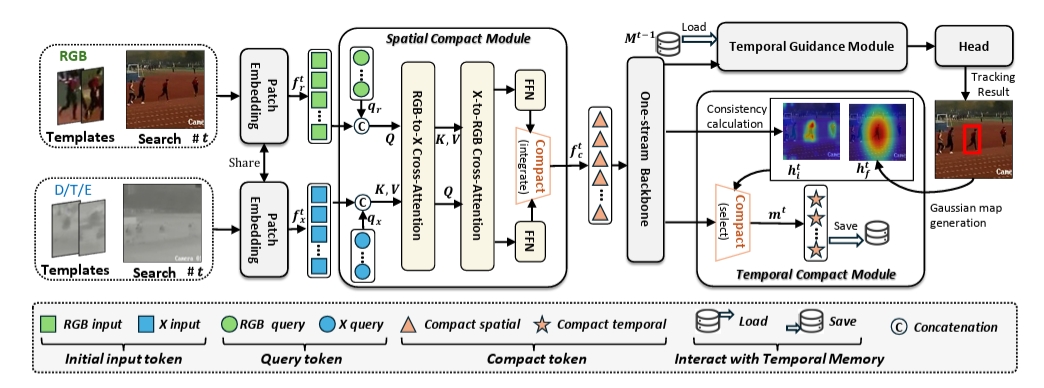

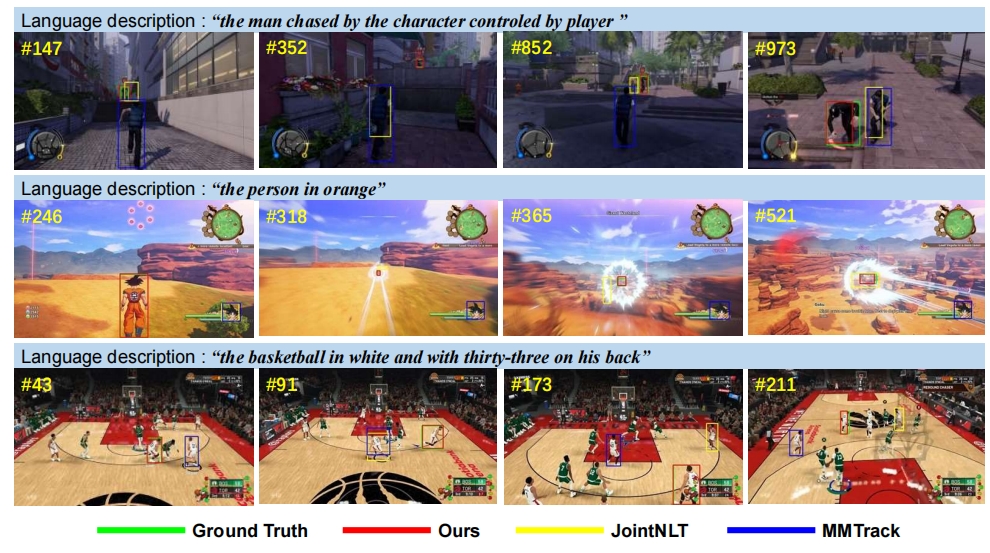

ATCTrack: Aligning Target-Context Cues with Dynamic Target States for Robust Vision-Language Tracking

X. Feng*, Shiyu Hu*, X. Li, D. Zhang, M. Wu, J. Zhang, X. Chen, K. Huang (*Equal Contributions)

International Conference on Computer Vision (CCF-A Conference, Highlight)

📌 Visual Language Tracking 📌 Multimodal Learning 📌 Adaptive Prompts

📃 Paper 📑 PDF

Visual Intelligence Evaluation Techniques for Single Object Tracking: A Survey (单目标跟踪中的视觉智能评估技术综述)

Shiyu Hu, X. Zhao, K. Huang

Journal of Images and Graphics (《中国图象图形学报》, CCF-B Chinese Journal)

📌 Visual Object Tracking 📌 Intelligent Evaluation Technique 📌 AI4Science

📃 Paper 📑 PDF

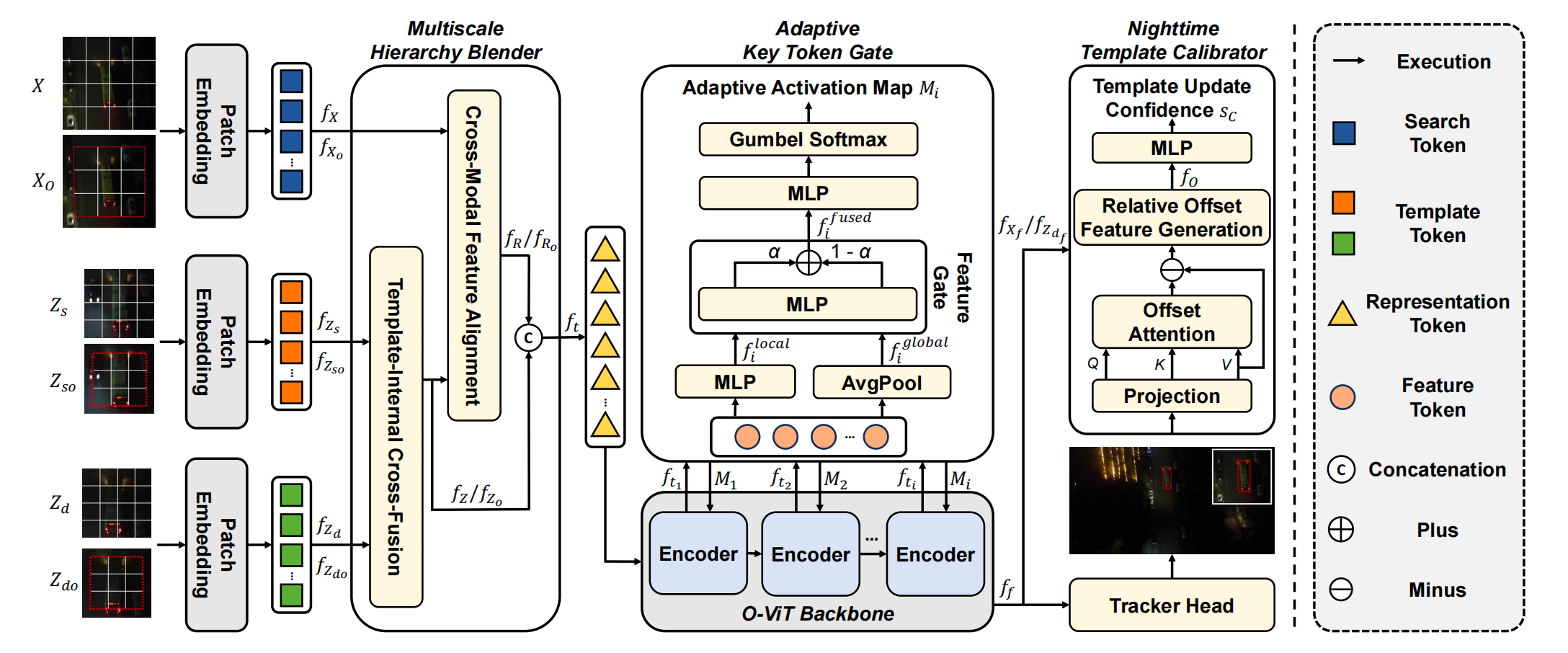

DARTer: Dynamic Adaptive Representation Tracker for Nighttime UAV Tracking

X. Li*, X. Li*, Shiyu Hu✉️

International Conference on Multimedia Retrieval (CCF-B Conference)

📌 Nighttime UAVs Tracking 📌 Dark Feature Blending 📌 Dynamic Feature Activation

📃 Paper 📑 PDF

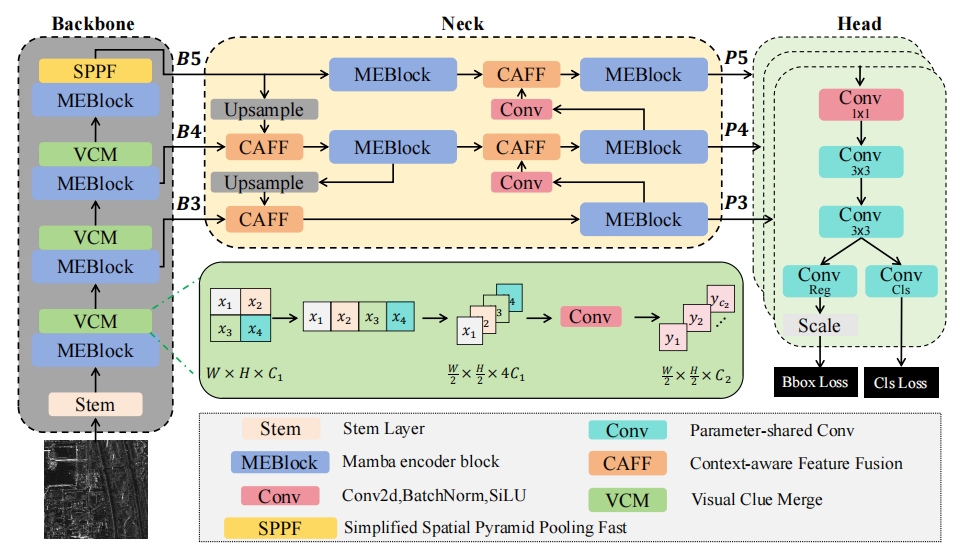

Improved SAR Aircraft Detection Algorithm Based on Visual State Space Models

Y. Wang, J. Zhang, Y. Wang, Shiyu Hu✉️, B. Shen, Z. Hou, W. Zhou

IET Computer Vision (CCF-C Journal)

📌 Synthetic Aperture Radar 📌 State Space Models 📌 Aircraft Object Detection

MemVLT: Vision-Language Tracking with Adaptive Memory-based Prompts

X. Feng, X. Li, Shiyu Hu, D. Zhang, M. Wu, J. Zhang, X. Chen, K. Huang

Conference on Neural Information Processing Systems (CCF-A Conference, Poster)

📌 Visual Language Tracking 📌 Human-like Memory Modeling 📌 Adaptive Prompts

📃 Paper 📑 PDF

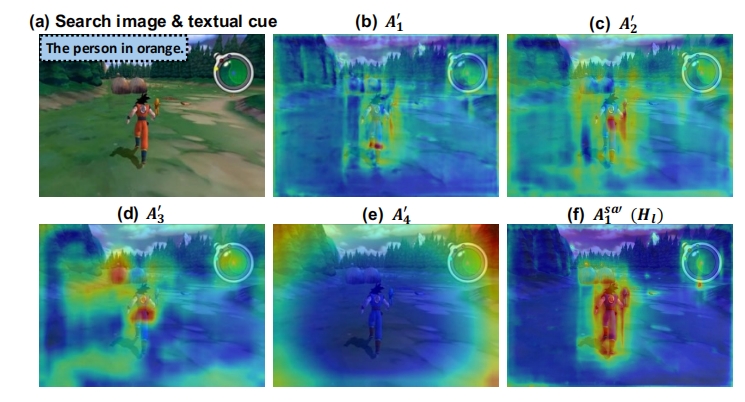

Enhancing Vision-Language Tracking by Effectively Converting Textual Cues into Visual Cues

X. Feng, D. Zhang, Shiyu Hu, X. Li, M. Wu, J. Zhang, X. Chen, K. Huang

IEEE International Conference on Acoustics, Speech, and Signal Processing (CCF-B Conference, Poster)

📌 Visual Language Tracking 📌 Multi-modal Learning 📌 Grounding Model

📃 Paper 📑 PDF

Robust Single-particle Cryo-EM Image Denoising and Restoration

J. Zhang, T. Zhao, Shiyu Hu, X. Zhao

IEEE International Conference on Acoustics, Speech, and Signal Processing (CCF-B Conference, Poster)

📌 Medical Image Processing 📌 AI4Science 📌 Diffusion Model

📃 Paper 📑 PDF

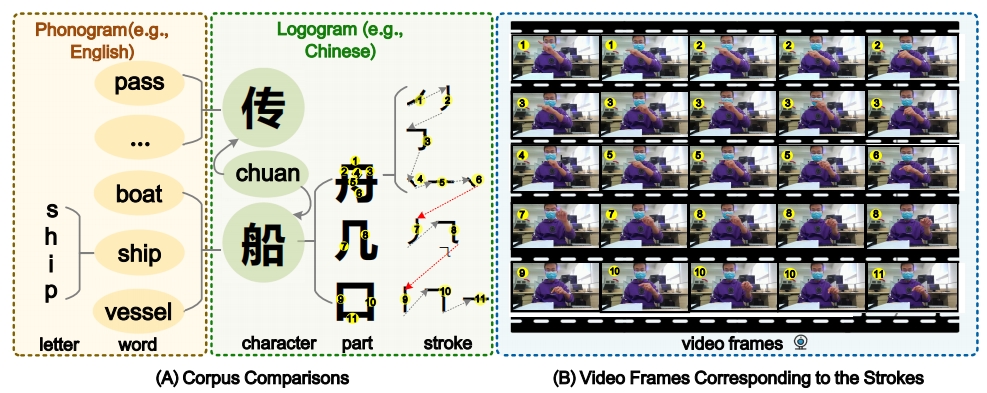

Finger in Camera Speaks Everything: Unconstrained Air-Writing for Real-World

M. Wu, K. Huang, Y. Cai, Shiyu Hu, Y. Zhao, W. Wang

IEEE Transactions on Circuits and Systems for Video Technology (CCF-B Journal)

📌 Air-writing Technique 📌 Benchmark Construction 📌 Human-machine Interaction

📃 Paper 📑 PDF 🔧 Toolkit

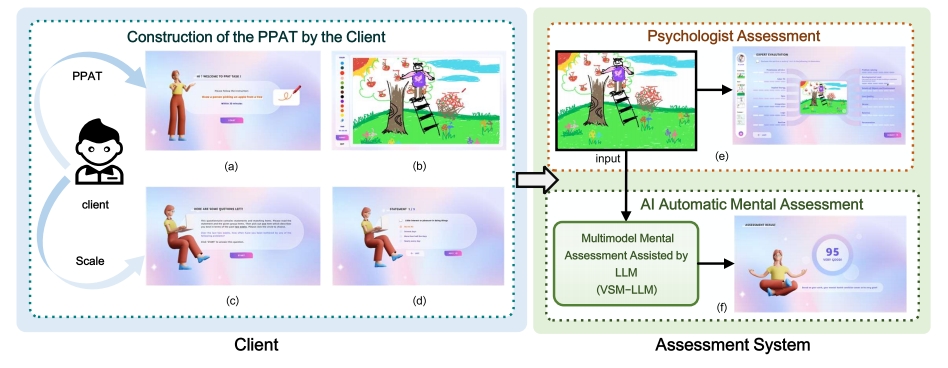

VS-LLM: Visual-Semantic Depression Assessment based on LLM for Drawing Projection Test

M. Wu, Y. Kang, X. Li, Shiyu Hu, X. Chen, Y. Kang, W. Wang, K. Huang

Chinese Conference on Pattern Recognition and Computer Vision (CCF-C Conference)

📌 Psychological Assessment System 📌 Gamified Assessment 📌 AI4Science

📃 Paper 📑 PDF

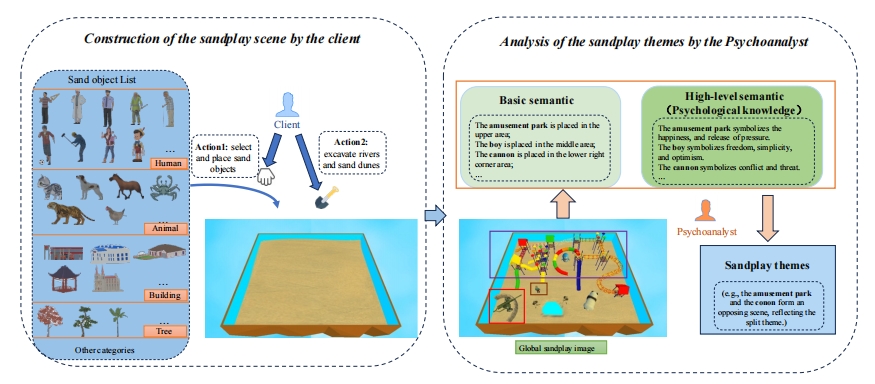

A Hierarchical Theme Recognition Model for Sandplay Therapy

X. Feng, Shiyu Hu, X. Chen, K. Huang

Chinese Conference on Pattern Recognition and Computer Vision (CCF-C Conference, Poster)

📌 Psychological Assessment System 📌 Gamified Assessment 📌 AI4Science

📃 Paper 📑 PDF 🔖 Supplementary 🪧 Poster

Artificial Intelligence-Enabled Adaptive Learning Platforms: A Review

L. Tan, Shiyu Hu, Darren J. Yeo, KH Cheong

Computers & Education: Artificial Intelligence

📌 Adaptive Learning Platforms 📌 AI for Education 📌 Educational Technology

📃 Paper 📑 PDF

A Comprehensive Review on Automated Grading Systems in STEM Using AI Techniques

L. Tan, Shiyu Hu, Darren J. Yeo, KH Cheong

Mathematics

📌 Automated Grading Systems 📌 AI for Education 📌 Educational Technology

📃 Paper

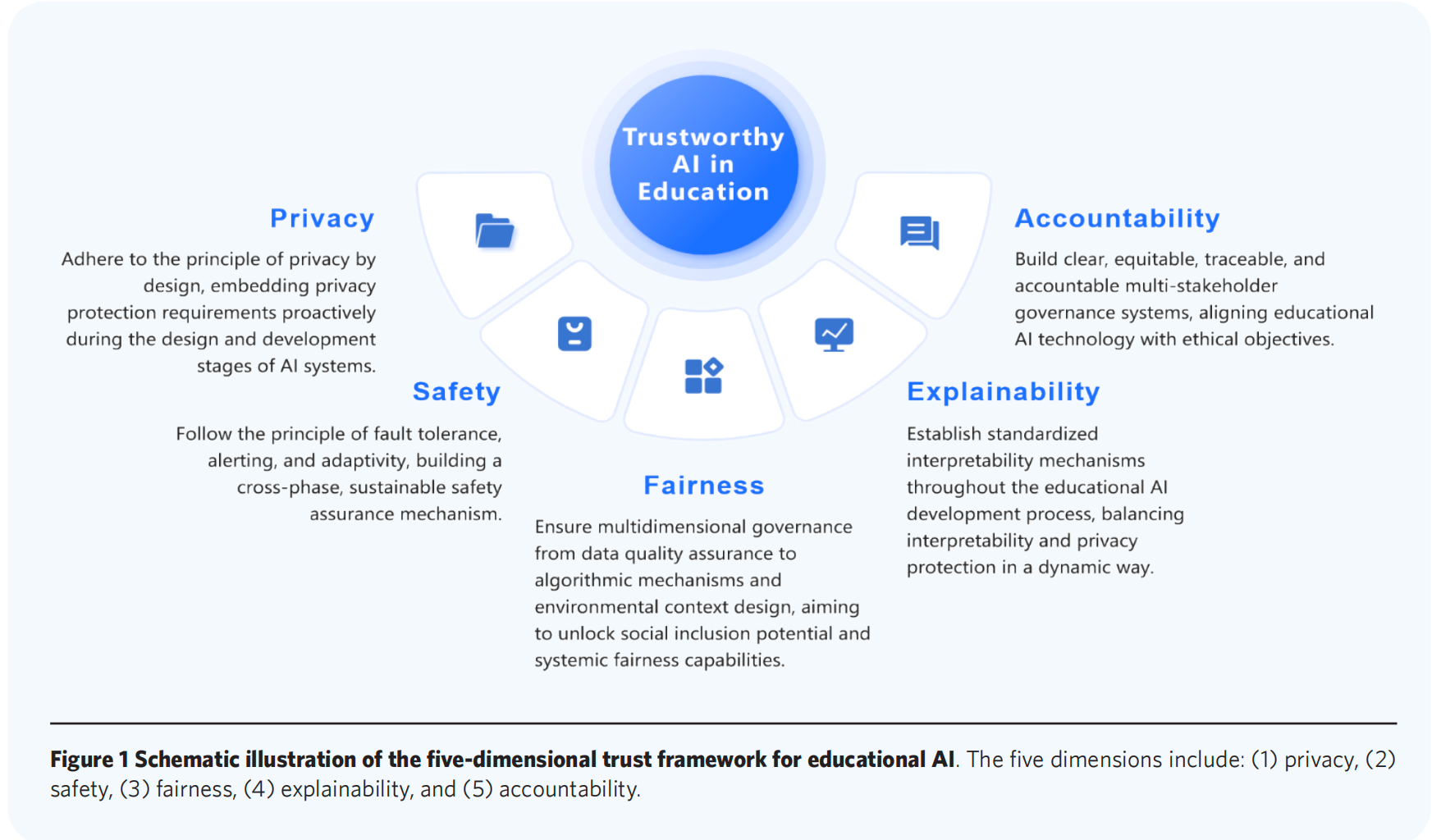

Trustworthy AI in education: Framework, cases, and governance strategies

Y. Ma, X. Li, Shiyu Hu, S. Liu, KH Cheong

Innovation and Emerging Technologies

📌 Trustworthy Artificial Intelligence 📌 Educational Governance 📌 Algorithmic Fairness

📃 Paper

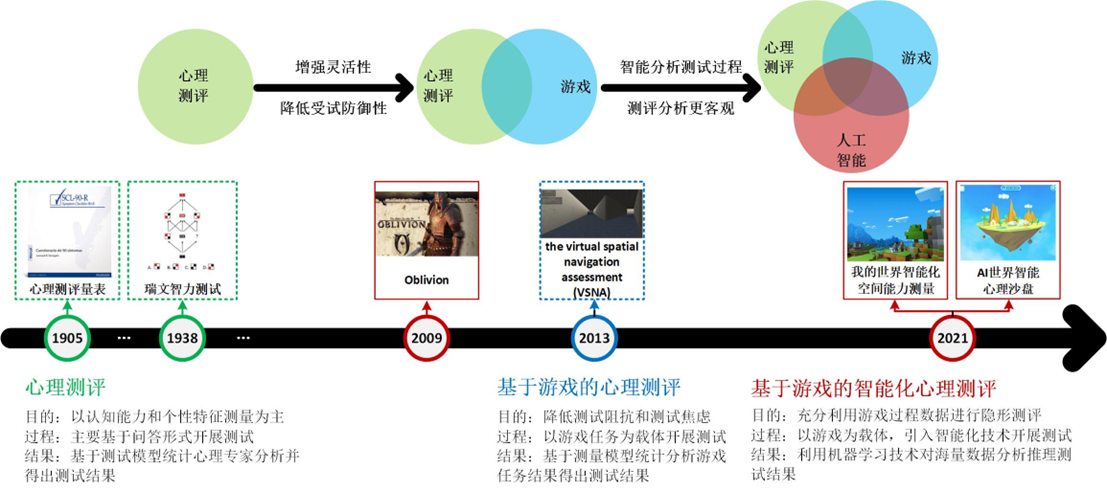

A Review of Intelligent Psychological Assessment Based on Interactive Environment (基于交互环境的智能化心理测评)

K. Huang, Y. Kang, C. Yan, Shiyu Hu, L. Wang, T. Tao, W. Gao

Chinese Mental Health Journal (《中国心理卫生杂志》, CSSCI Journal, Top Psychological Journal in China)

📌 Psychological Assessment System 📌 Gamified Assessment 📌 AI4Science

Workshop

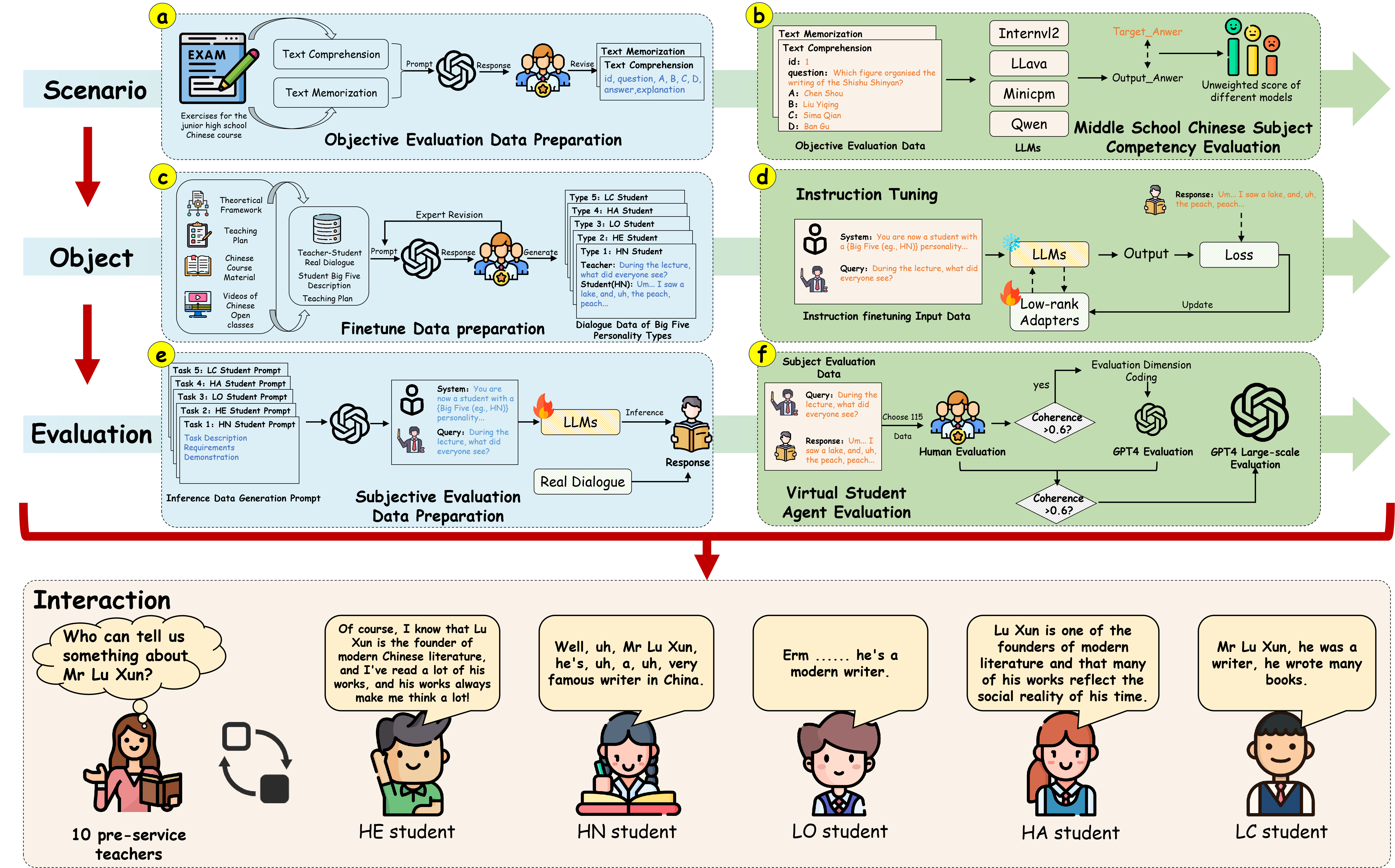

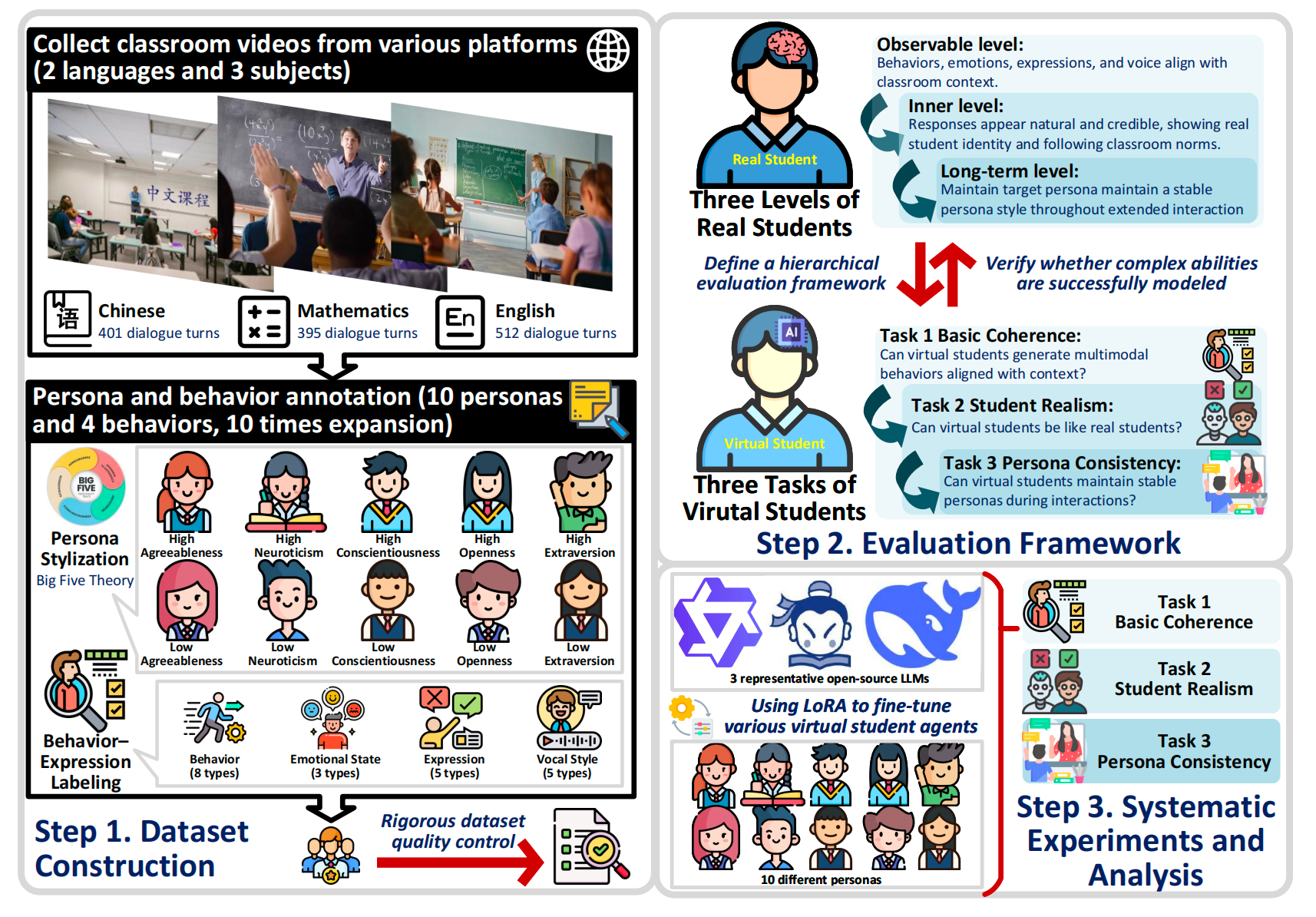

- AAAIW 2026: Learning to Be Taught: A Structured SOEI Framework for Modeling and Evaluating Personality-Aligned Virtual Student Agents, Y. Ma*, Shiyu Hu*, X. Li, Y. Wang, Y. Chen, S. Liu, KH Cheong (*Equal Contributions), the AI for Education Workshop in the 40th Annual AAAI Conference on Artificial Intelligence (Workshop in CCF-A Conference)

- AAAIW 2026: Redefining Educational Simulation: EduVerse as a User-Defined and Developmental Multi-Agent Simulation Space, Y. Ma*, Shiyu Hu*, B. Zhu, Y. Wang, Y. Kang, S. Liu, KH Cheong (*Equal Contributions), the AI for Education Workshop in the 40th Annual AAAI Conference on Artificial Intelligence (Workshop in CCF-A Conference)

- AAAIW 2026: From Objective to Subjective: A Benchmark for Virtual Student Abilities, B. Zhu*, Shiyu Hu*, Y. Ma, Y. Zhang, KH Cheong (*Equal Contributions), the AI for Education Workshop in the 40th Annual AAAI Conference on Artificial Intelligence (Workshop in CCF-A Conference)

- CVPRW 2024: Diverse Text Generation for Visual Language Tracking Based on LLM, X. Li, X. Feng, Shiyu Hu, M. Wu, D. Zhang, J. Zhang, K. Huang, the 3rd Workshop on Vision Datasets Understanding and DataCV Challenge in CVPR 2024 (Workshop in CCF-A Conference, Oral, Best Paper Honorable Mention)

📃 Paper 📑 PDF 🪧 Poster 📹 Slides 🌐 Platform 🔧 Toolkit 💾 Dataset 🏆 Award

Preprint

⚙️ Projects

The list here mainly includes engineering projects; more academic projects have already been published as research papers. Please refer to 📝 Publications for more information.

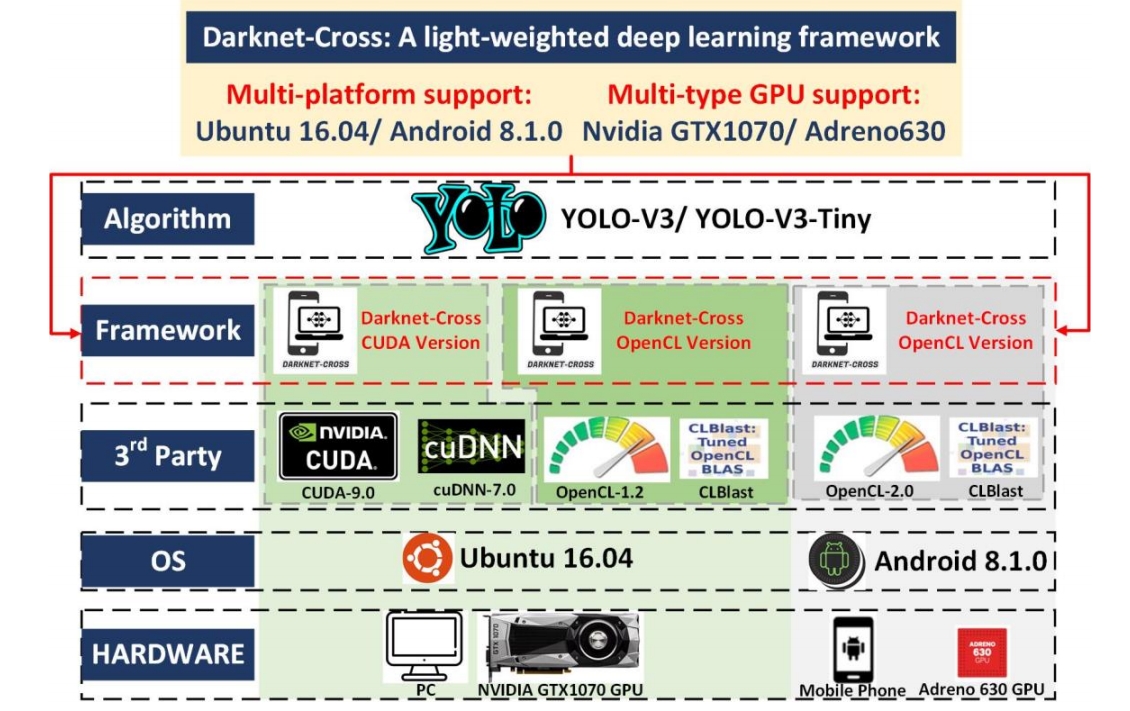

Darknet-Cross: Light-weight Deep Learning Framework for Heterogeneous Computing

📌 High-performance Computing 📌 Heterogeneous Computing 📌 Deep Learning Framework

- Darknet-Cross is a lightweight deep learning framework, mainly based on the open-source deep learning algorithm library Darknet and yolov2_light, and it has been successfully ported to mobile devices through cross-compilation. This framework enables efficient algorithm inference using mobile GPUs.

- Darknet-Cross supports algorithm acceleration processing on various platforms (e.g., Android and Ubuntu) and various GPUs (e.g., Nvidia GTX1070 and Adreno 630).

- The work is a part of my master’s thesis at the University of Hong Kong (thesis defense grade: A+).

A Skin Color Detection System without Color Atlas

📌 Color Constancy 📌 Skin Color Detection 📌 Illumination Estimation

- Under 18 different environmental lighting conditions and with 4 combinations of smartphone parameters, skin color data was collected from 110 participants. The skin color dataset consists of 7,920 images, with the testing results from CK Company’s MPA9 skin color detector serving as the ground truth for user skin colors.

- Using an elliptical skin model, the essential skin regions are extracted from the images. The open-source color constancy model FC4 is employed to recover the environmental lighting conditions. Subsequently, the skin color detection results for users are calculated using support vector regression (SVR).

- The related work has been successfully deployed in Huawei’s official mobile application ‘Mirror’ for its AI skin testing function.

A Project for Cell Tracking Based on Deep Learning Method

📌 Medical Image Processing 📌 AI4Science 📌 Cell Segmentation and Tracking

- This method follows the tracking-by-detection paradigm and combines per-frame CNN prediction for cell segmentation with a Siamese network for cell tracking.

- This project was submitted to the Cell Tracking Challenge in Mar. 2021 and, as of Oct. 2023, holds second place on the Fluo-C2FL-MSC+ dataset and third place on the Fluo-C2FL-Huh7 dataset.

Research on the Dilemma and Countermeasures of Human-Computer Interaction in Intelligent Education

📌 Intelligent Education Technology 📌 Human-Computer Interaction 📌 AI4Science

- Incorporating insights and methodologies from education, cognitive psychology, and computer science, this project establishes a theoretical framework for understanding the evolution of HCI within intelligent education.

- Drawing upon the established theoretical framework, this project conducts a comprehensive analysis of the evolution of HCI in educational settings, transitioning from collaboration to integration. Furthermore, it delves into the key issues arising from this transformative process within the realm of intelligent education.

- Building upon the core issues unearthed, this project investigates strategies for leveraging theoretical guidance and technical enhancements to enhance the efficacy of HCI in intelligent education, ultimately striving towards effective human-computer integration.

- The project is funded by the 2023 Intelligent Education PhD Research Fund, supported by the Institute of AI Education Shanghai and East China Normal University, and is currently in progress.

🏆 Honors and Awards

- 2025 IEEE SMCS TEAM Program Award by the IEEE Systems, Man, and Cybernetics Society

- 2024 Best Paper Honorable Mention in the 3rd Workshop on Vision Datasets Understanding and DataCV Challenge in CVPR 2024 (CVPRW最佳论文提名)

- 2024 Beijing Outstanding Graduates (北京市优秀毕业生, top 5%)

- 2023 China National Scholarship (国家奖学金, top 1%, only 8 Ph.D. students on the main campus of University of Chinese Academy of Sciences won this scholarship)

- 2023 First Prize of Climbing Scholarship in Institute of Automation, Chinese Academy of Sciences (攀登一等奖学金, only 6 students in Institute of Automation, Chinese Academy of Sciences won this scholarship)

- 2022 Merit Student of University of Chinese Academy of Sciences (中国科学院大学三好学生)

- 2017 Excellent Innovative Student of Beijing Institute of Technology (北京理工大学优秀创新学生)

- 2016 College Scholarship of Chinese Academy of Sciences (中国科学院大学生奖学金)

- 2016 Excellent League Member on Youth Day Competition of Beijing Institute of Technology (北京理工大学优秀团员)

- 2015 National First Prize in Contemporary Undergraduate Mathematical Contest in Modeling (CUMCM) (全国大学生数学建模竞赛国家一等奖, top 1%, only 1 team in Beijing Institute of Technology won this prize) [📑PDF] [📖Selected and Reviewed Outstanding Papers in CUMCM (2011-2015) (Chapter 9)]

- 2015 First Prize of Mathematics Modeling Competition within Beijing Institute of Technology (北京理工大学数学建模校内选拔赛第一名)

- 2015 Outstanding Individual on Summer Social Practice of Beijing Institute of Technology (北京理工大学暑期社会实践优秀个人)

- 2015 Second Prize on Summer Social Practice of Beijing Institute of Technology (北京理工大学暑期社会实践二等奖, team leader)

- 2015 Outstanding Student Cadre of Beijing Institute of Technology (北京理工大学优秀学生干部)

- 2015 Outstanding League Cadre on Youth Day Competition of Beijing Institute of Technology (北京理工大学优秀团干部)

- 2015 Outstanding Youth League Branch of Beijing Institute of Technology (北京理工大学优秀团支部, team leader)

- 2015 Top-10 Activities on Youth Day Competition of Beijing Institute of Technology (北京理工大学十佳团日活动, team leader)

- 2014 Outstanding Student of Beijing Institute of Technology (北京理工大学优秀学生)

- 2014, 2015, 2016, 2017 Academic Scholarship of Beijing Institute of Technology (北京理工大学学业奖学金)

📣 Activities and Services

Tutorial

34th International Joint Conference on Artificial Intelligence (IJCAI)

- Title: Human-Centric and Multimodal Evaluation for Explainable AI: Moving Beyond Benchmarks

- Date & Location: 14:00-15:30, 18th August, 2025, Montreal, Canada

28th European Conference on Artificial Intelligence (ECAI)

- Title: From Benchmarking to Trustworthy AI: Rethinking Evaluation Methods Across Vision and Complex Systems

- Date & Location: 26th October, 2025, Bologna, Italy

🌐 Webpage

2025 IEEE International Conference on Systems, Man, and Cybernetics (SMC)

- Title: The Synergy of Large Language Models and Evolutionary Optimization on Complex Networks

- Date & Location: 5th October, 2025, Vienna, Austria

31st IEEE International Conference on Image Processing (ICIP)

- Title: An Evaluation Perspective in Visual Object Tracking: from Task Design to Benchmark Construction and Algorithm Analysis

- Date & Location: 9:00-12:30, 27th October, 2024, Abu Dhabi, United Arab Emirates

- Duration: Half-day

📹 Slides 🌐 Webpage

27th International Conference on Pattern Recognition (ICPR)

- Title: Visual Turing Test in Visual Object Tracking: A New Vision Intelligence Evaluation Technique based on Human-Machine Comparison

- Date & Location: 14:30-18:00, 1st December, 2024, Kolkata, India

- Duration: Half-day

📹 Slides

17th Asian Conference on Computer Vision (ACCV)

- Title: From Machine-Machine Comparison to Human-Machine Comparison: Adapting Visual Turing Test in Visual Object Tracking

- Date & Location: 9:00-12:00, 9th December, 2024, Hanoi, Vietnam

- Duration: Half-day

📹 Slides 🌐 Webpage

Mini-Symposium

The Fifth International Nonlinear Dynamics Conference (NODYCON 2026)

- Title: Complex Network Systems and Large Language Models

- Date & Location: 20th-23rd September, 2026, Sapienza University of Rome, Italy

🌐 Webpage

Guest Editor

- Journals: Electronics (Special Issue: Techniques and Applications of Multimodal Data Fusion)

Associate Editor

- Journals: Innovation and Emerging Technologies

Reviewer

- Conferences: NeurIPS, ICML, ICLR, CVPR, ECCV, ICCV, AAAI, IJCAI, ACMMM, ICRA, AISTATS, etc.

- Journals: IEEE Transactions on Image Processing, SCIENCE CHINA Information Sciences, IEEE Transactions on Network Science and Engineering, IEEE Transactions on Vehicular Technology, Information Fusion, Engineering Applications of Artificial Intelligence, Expert Systems with Applications, Neurocomputing, Knowledge-Based Systems, Scientific Reports, etc.

Member

- Societies: Institute of Electrical and Electronics Engineers (IEEE, No.97803543), China Society of Image and Graphics (CSIG, No.E651129499M), Chinese Association for Artificial Intelligence (CAAI, No.E660120827A), China Computer Federation (CCF, No.Z1771M).

📄 CV

✉️ Contact

- shiyu.hu@ntu.edu.sg (Main)

- hushiyu199510@gmail.com (Personal)

- hushiyu2019@ia.ac.cn (Valid from 2019.06 to 2024.07)

Homepage visitors have been recorded since April 18th, 2024. Thanks for your attention.

© Shiyu Hu | Last updated: 2025-12